Should your organisation do unconscious bias training?

On any given day, we have to make a lot of decisions.

Some simple, some a little more complex.

Luckily, our brains have developed mental shortcuts, so we don't have to think about every last detail. And most of the time they work rather well. Unfortunately though, there are times when these shortcuts can trip us up, especially with complex decisions, like recruitment.

Of course, no one likes to think they're making biased decisions.

And it would be easy enough if we could just turn our biases off.

Unfortunately, research has shown time and time again that even when you're told that bias might affect your decisions, it still has the same effect.

The only way to avoid bias altogether is to stop feeding your brain information that leads to bias in the first place, like removing a name from a CV.

This is just the tip of the iceberg.

Having the right technology can have a huge impact on your recruitment. And this is where Applied can help.

By focusing on what's important, you make better judgments on who's best suited for the role and as a consequence, gain a more diverse workforce.

Unconscious bias training has become very, very popular

Even before BLM and the current wave of interest, US corporates were already spending $8 billion a year on diversity training programmes, an absolutely extraordinary amount.

If you look at what's happened to the Google results for unconscious bias training, you can see that it's actually spiked.

As the Black Lives Matter movement took off in its current form, you can see that there was an absolutely huge spike in people searching for unconscious bias training. It's mostly in the United Kingdom, Australia, United States, Canada and the English speaking world, which is not entirely surprising.

And what's also interesting is you can already see it starting to dip.

So it may not continue at its current levels forever, but it's still hugely popular. People were still interested even before this happened and spending an extraordinary amount of money on it.

And it has now reached the very highest level.

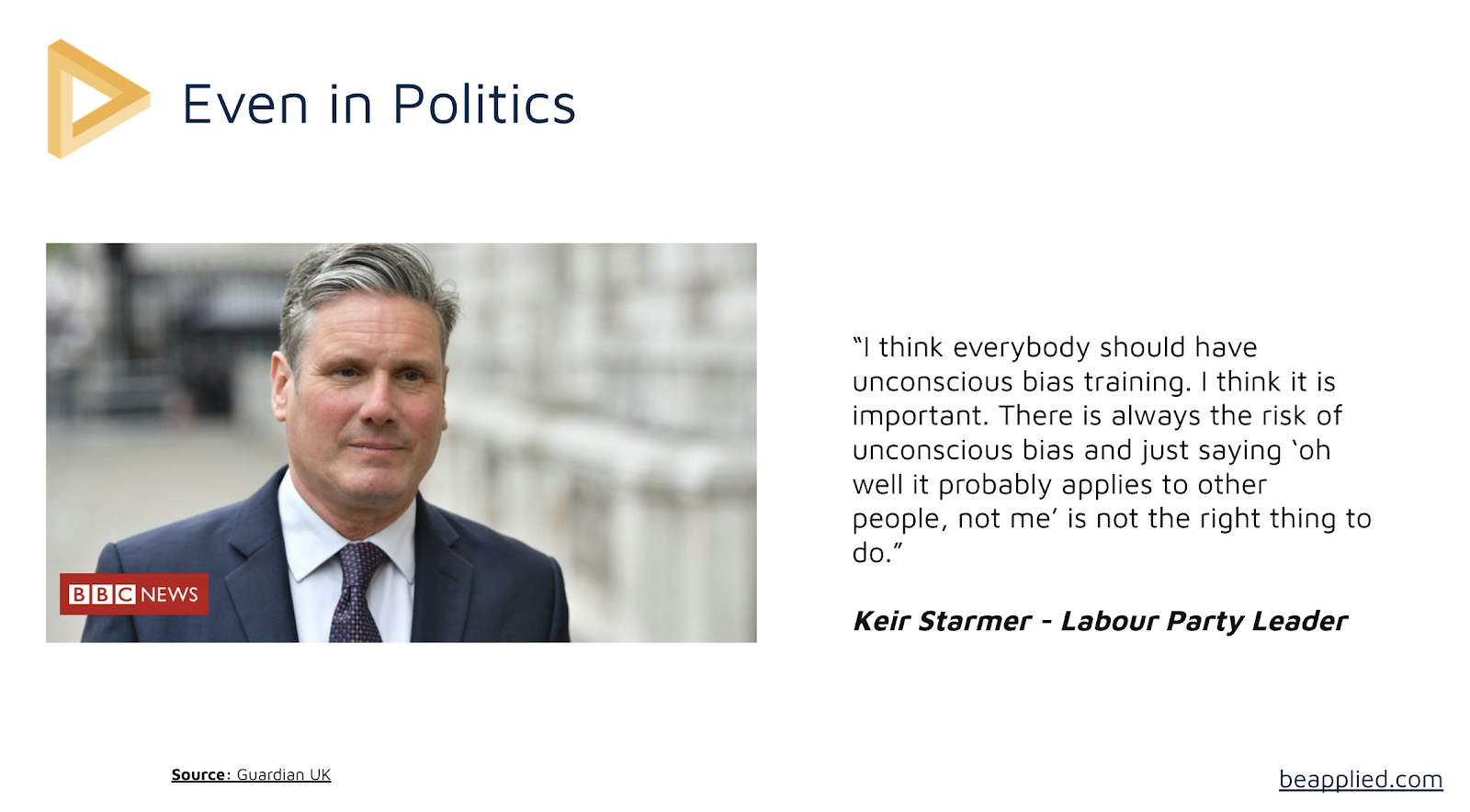

For those of you who don't know, Keir Starmer, the head of the Labour Party in the UK, got into trouble for saying some things a few months ago.

His response was to do unconscious bias training:

So this is a way of thinking when dealing with diversity issues that's hit the very highest level of British politics.

And whether the same happens in the States and elsewhere, I don't know, but we're certainly going for it here in the UK.

What is unconscious bias?

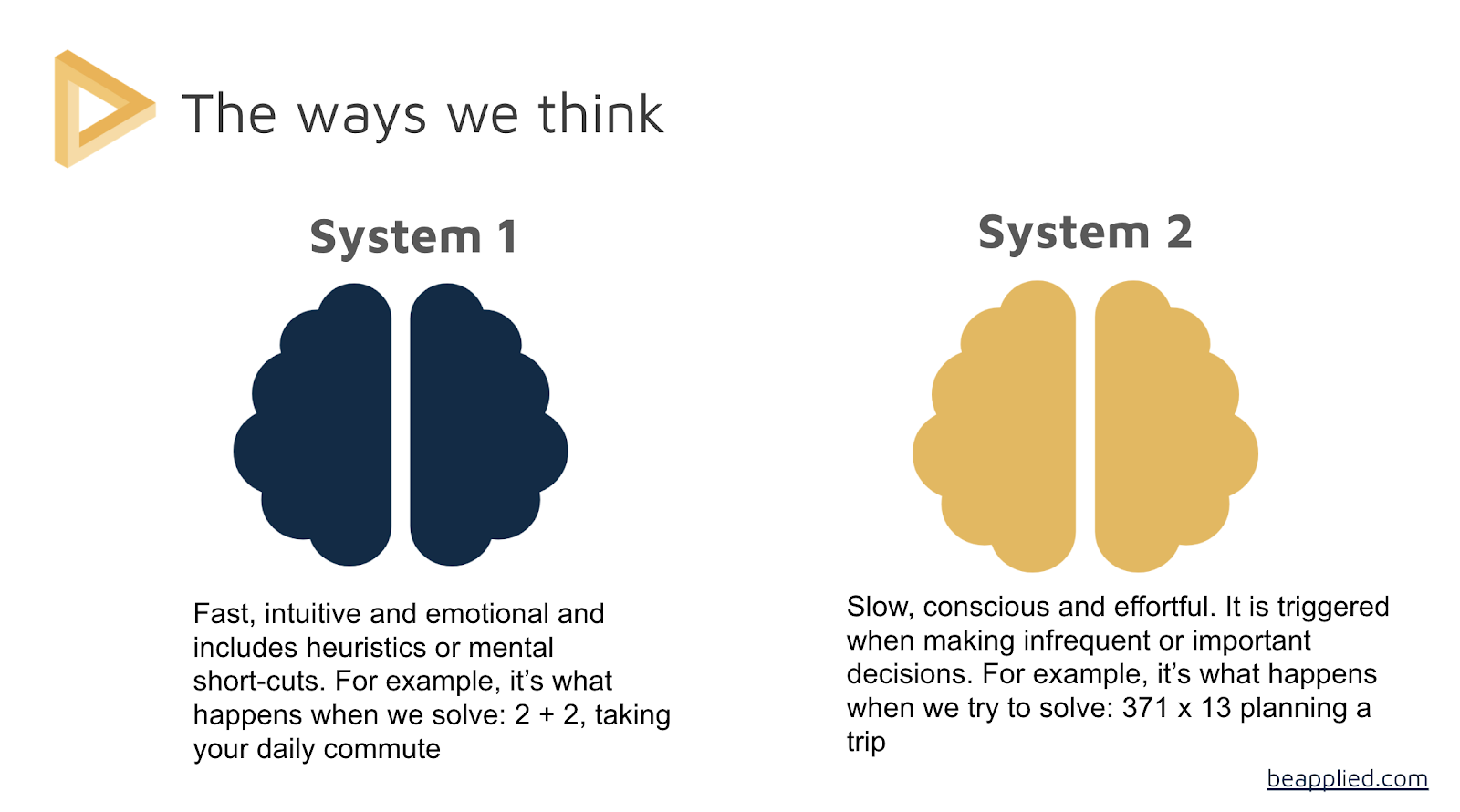

So the concept of unconscious bias comes out of the research of Kahneman and Sunstein (and others). It essentially postulates (and it's been proven - it's won Nobel prizes) that we think in two ways, system one and system two.

System one is your fast, intuitive and emotional reactions to things. It's how you know that two plus two equals four, how you recognise people who dress in a certain way (police officers or bus conductors) and how you can walk home without really paying attention to where you're going.

It's all the intuitive decisions that you make on a day to day basis.

It's easy, and includes a lot of mental shortcuts.

System one isn't bad. And I want to make that very clear. System one is what allows you to operate and actually function in the world.

System two is the slow, conscious and effortful thinking.

It's triggered when making infrequent, important decisions.

It kicks in when you take a complicated route, rather than just your daily commute. When you're planning a trip or working out how to get somewhere, it’s how you solve difficult problems.

One way to think about it is: system one thinking just seems to appear in your brain, almost like someone else put it there. You don't have to be told someone looks happy, you just sort of know they look happy.

System two is when you have to think about it: it's conscious. And what we mean by unconscious bias, quite often, is when system one takes shortcuts and makes decisions that aren't necessarily optimal.

And quite often, unconscious bias occurs when we should be using system two, but actually fall back on using system one.

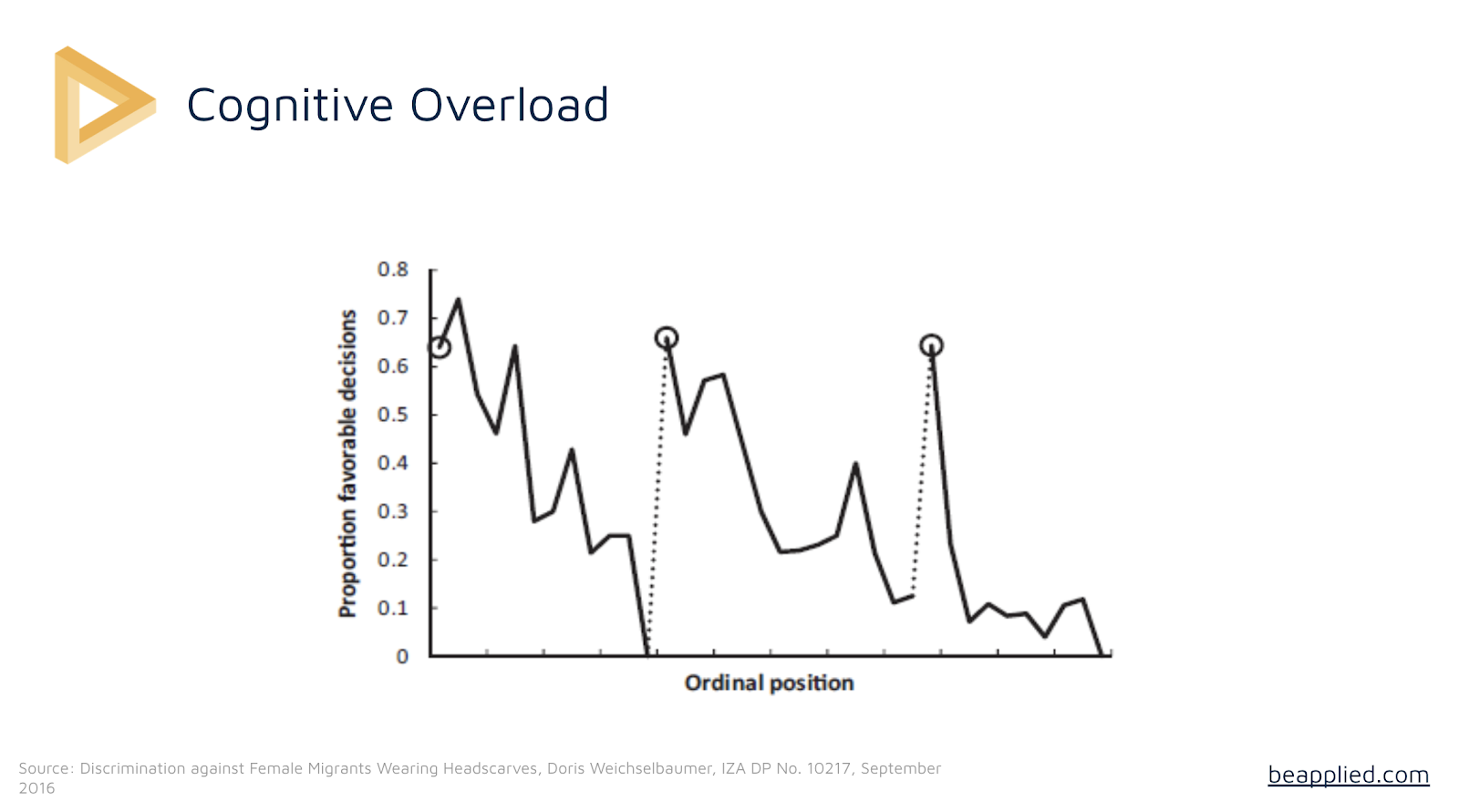

An example is cognitive overload.

This is from a very famous study of Israeli judges, looking at whether they're going to grant people parole:

You can see that the chances of someone getting a favourable parole decision start off high but decline over time.

And then if they have a break, it goes back up.

So what's happening here is that they're starting off with lots of energy, they're making effort, they're making thoughtful, conscious decisions.

Then as time goes on, they get tired, so they start doing the safe, easy thing. They start going off of their mental shortcuts, so they start becoming less and less favourable.

So that's an example of what we call cognitive overload. It's where you're making so many system two choices that you have to give up and use system one.

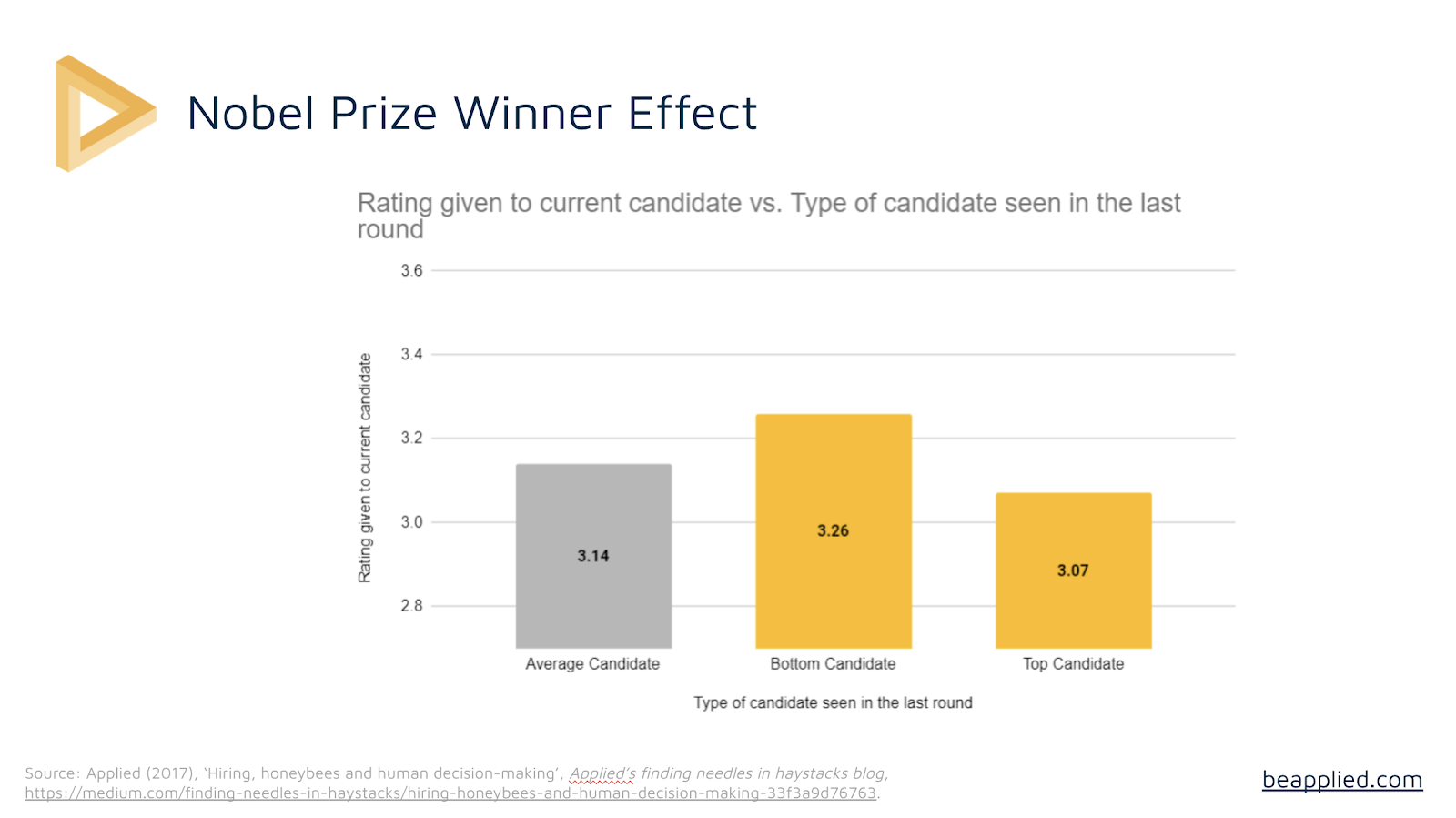

Another example is the Nobel Prize winner effect:

If you're rating candidates in a recruitment context, a lot of your system one thinking has to do with what you've seen before and straight after.

So if you saw someone who wasn't a very good candidate, you rate the next candidate much more highly.

And if you see someone who's an absolutely brilliant candidate, you will judge the next person more harshly.

So that's sort of a contextual kind of system one. System one is basically how we make sense of the world - comparing things with things we've just seen.

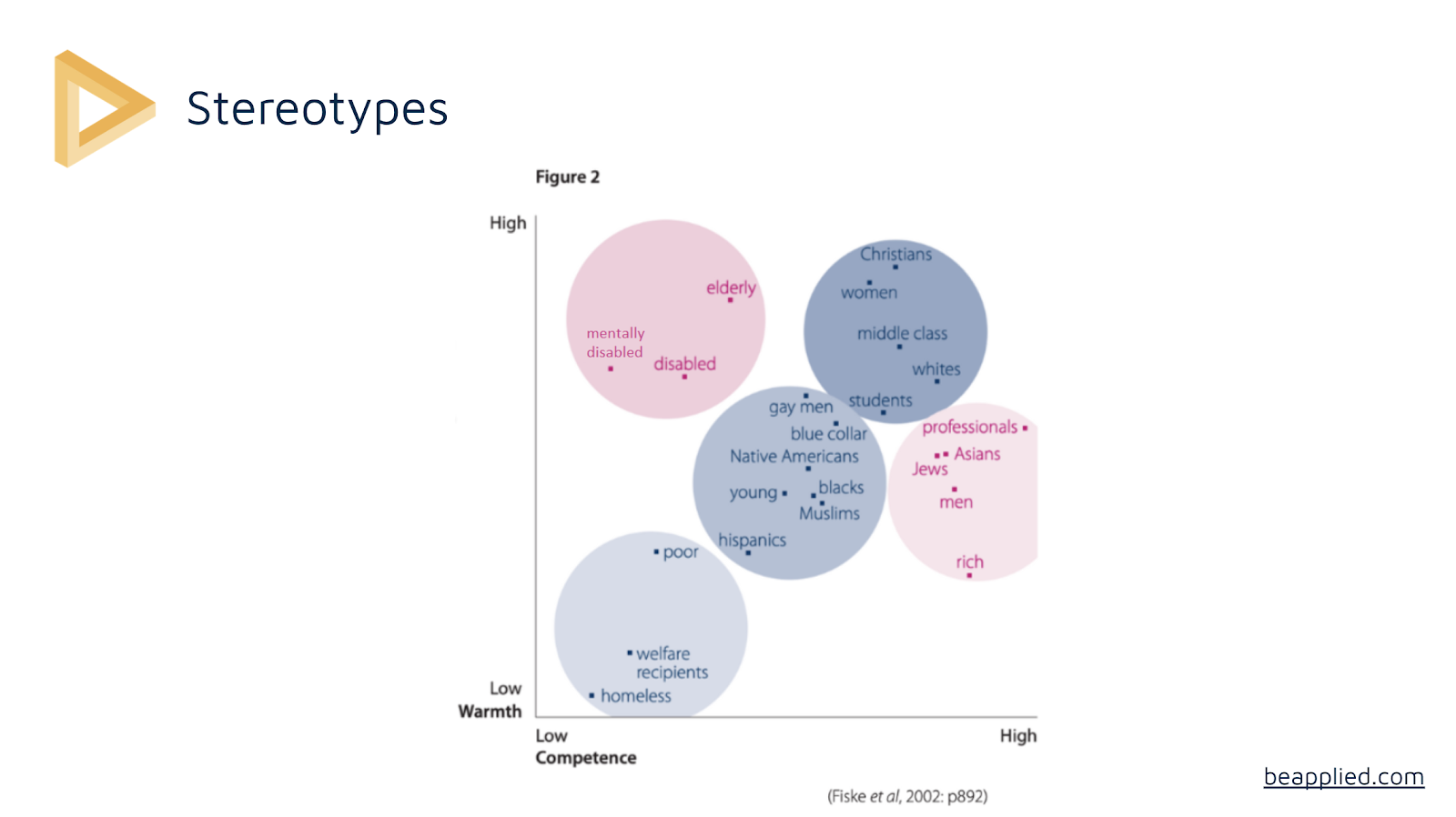

Another type of unconscious bias is stereotypes. This is all of the assumptions that we bring to people, depending on how they look or what we perceive about them.

You can see in this particular study that we judge how warm, friendly and competent we think someone is just by what they look like.

So, for example, we look at homeless people as being low warmth and low competence, while Christians, women and the middle class we think of as warm and highly competent.

And then some people like men, we think of as highly competent, but we don't think of them as particularly warm and vice versa… you get the idea.

So when we don't have any extra information to go off, we default to system one and build up assumptions and stereotypes in our mind.

What is unconscious bias training?

This is from the Human Rights Commission UK:

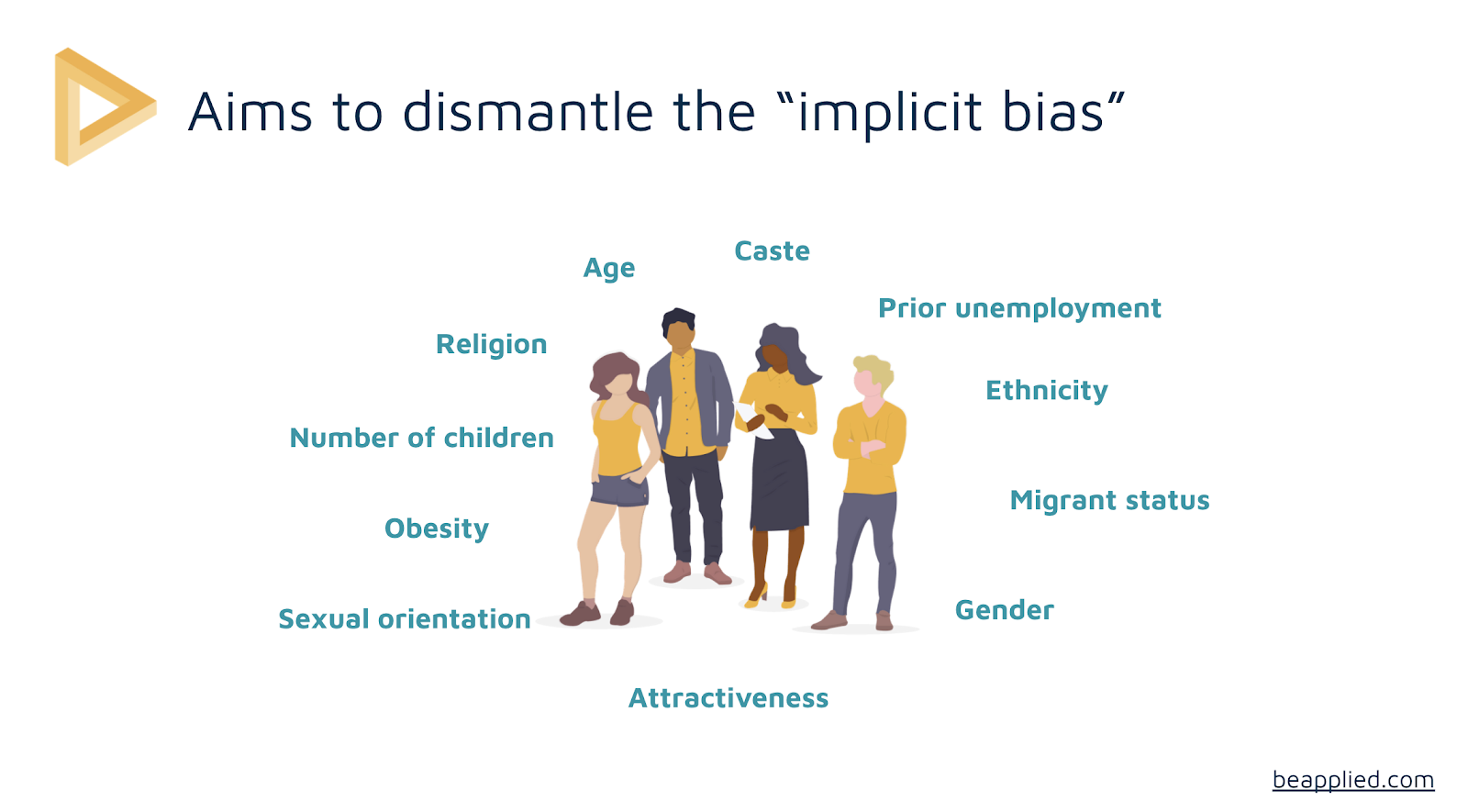

So if you think about the examples of unconscious bias we just looked at, the training is actually only aiming at dealing with a very specific type of unconscious bias - and that's stereotype bias - the stereotypes and assumptions we bring about particular groups.

There are actually over 200 or so cognitive biases that can interfere with recruitment processes and people decisions.

But unconscious bias training isn't really dealing with most of them.

It's not dealing with things like ordering effects - for example, how we're much more favourable towards the first people we see than the last - or peak-end effects: we remember the first and the last people we interview, but we don't seem to remember the people in the middle.

All unconscious bias training aims to deal with is these implicit biases, these assumptions we make about people, not all the biases that exist.

So even if you were to do everything that unconscious bias training says you should do, you could still have an incredibly biased process.

They deal with some sort of secondary effects, but always through this lens of stereotypes.

So affinity bias, for example, is the fact that we're attracted to people like us. I, as a white man, may feel more affinity towards other white men.

Now, unconscious bias training might tell you to watch out for that, as you're basing that on a stereotype. I might then say “okay”, and I might choose someone else. But I might still choose someone who went to the same university as me, even if they're a different ethnicity or gender or a different sexuality.

So if unconscious bias training can get rid of your propensity to have implicit biases, according to stereotypes, that is amazing and we should all be doing it.

Unconscious bias training is usually targeted at implicit bias relating to people's age, religion, sexual orientation, gender, ethnicity, all these sorts of things.

And that's important. It's really important because actually, those stereotypes, affinity bias, and top level implicit biases are really harmful.

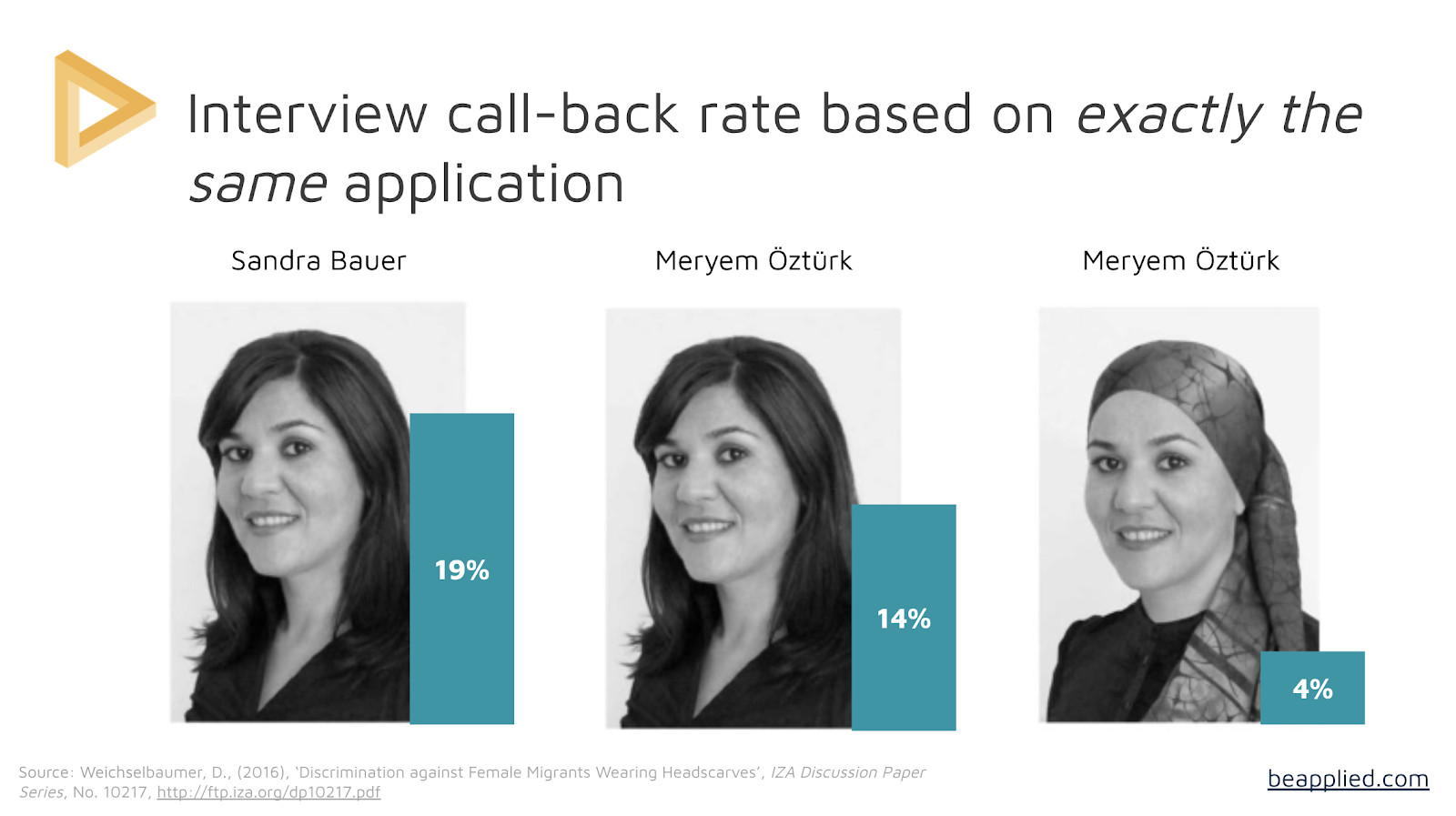

A classic example is looking at callback rates for people who send identical CVs, but just with a different name or a different picture.

In this example, an identical CV was sent out to thousands and thousands of businesses with a classic German name (this particular study was done in Germany), a classic Turkish name, or a classic Turkish name with someone wearing a headscarf.

Callback rate went down from 19% to 4%, depending on the name and the picture. So this is an example of implicit bias being extraordinarily harmful to diversity aims - and it's true across the world.

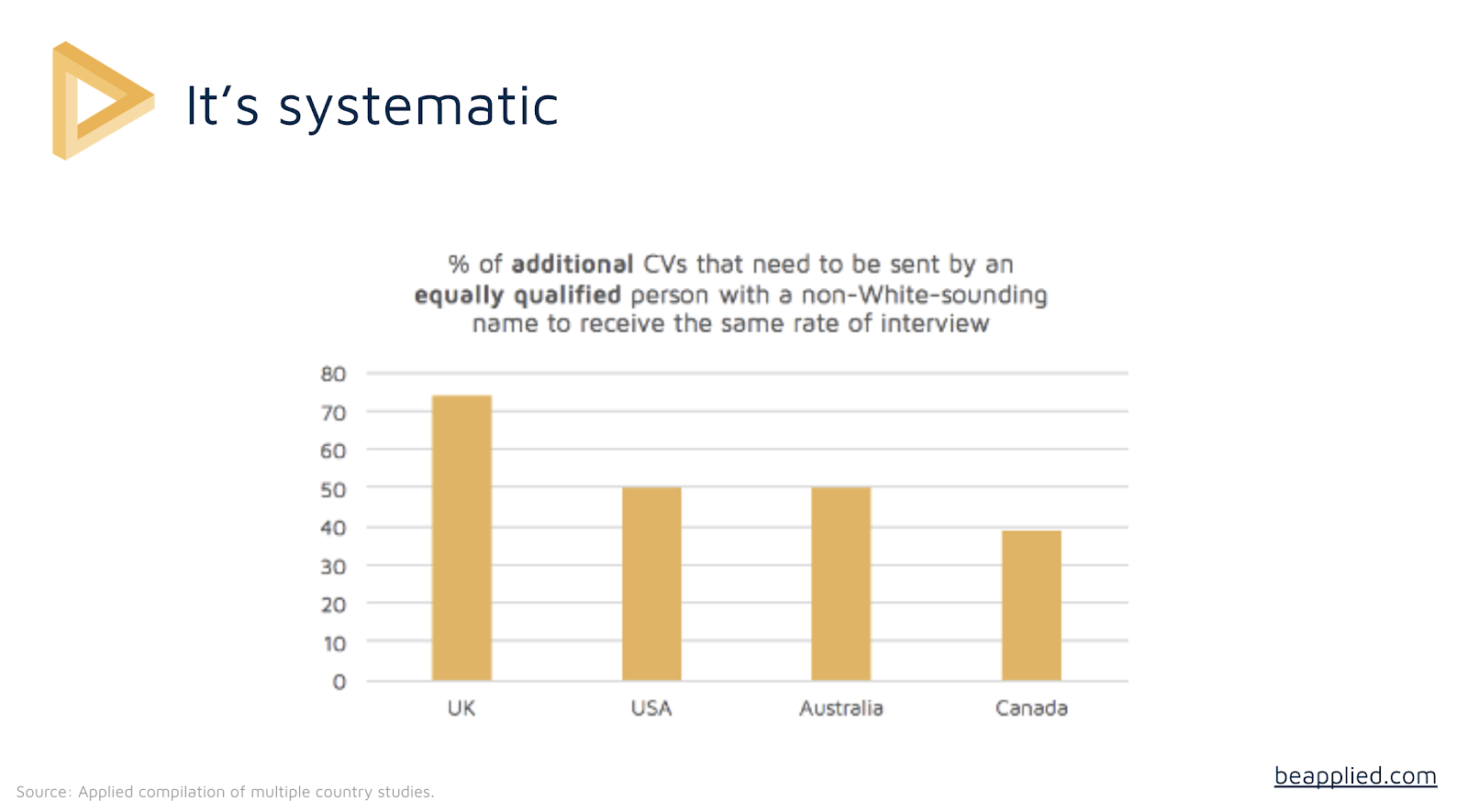

So this is the percentage of additional CVs you need to send as an equally qualified person with a non-white sounding name:

So here in the UK, it's really appalling, actually.

We're the worst at 72% - the USA is 50%, Australia, also 50% and Canada 40%.
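To make the "additional CVs" figure concrete, here is a minimal sketch of how such a number can be derived from two callback rates. It assumes (my assumption, not something stated in the studies) that the metric is simply the ratio of the two callback rates minus one. The function name is hypothetical. It plugs in the 19% and 4% callback rates from the German study mentioned earlier:

```python
def extra_applications_needed(majority_rate: float, minority_rate: float) -> float:
    """Fraction of additional applications needed for the same expected callbacks.

    Assumes the metric is (majority callback rate / minority callback rate) - 1.
    """
    if not (0 < majority_rate <= 1 and 0 < minority_rate <= 1):
        raise ValueError("callback rates must be in (0, 1]")
    return majority_rate / minority_rate - 1


# Callback rates quoted for the German study: 19% vs 4%.
extra = extra_applications_needed(0.19, 0.04)
print(f"{extra:.0%} more applications needed")
```

Under that assumption, a figure of 72% would mean the majority group's callback rate is about 1.72 times the minority group's.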

No one is looking great in terms of not having a biased selection process at that initial screen - and there's not much evidence that it is getting better.

If you look at the trends over time for job applications from people of Pakistani heritage in the UK, you can see that it's remarkably unchanged.

If you look at the ratio of callbacks for black applicants versus white applicants in the United States, you can again see it's unchanged.


It's not just to do with recruitment processes.

Obviously, we can build recruitment platforms but biases continue to affect people long after they enter the workforce.

A really famous study by Janice Fanning Madden looked at two of the largest stockbrokers in the US, which were sued in a class action lawsuit because the female stockbrokers were only earning 60% of what the male stockbrokers were earning.

It was mostly to do with commission. So their argument was that men are better stockbrokers than women - this isn't discrimination, this is just innate ability - it's not our fault.

But actually, when they dug into the data, it turned out that the women stockbrokers were being given inferior leads and inferior accounts. As soon as they corrected that, the gap closed.

So as you can tell, this implicit bias can affect careers well past the recruitment stage.

What does unconscious bias training look like?

If I signed up to do unconscious bias training, what would it look like?

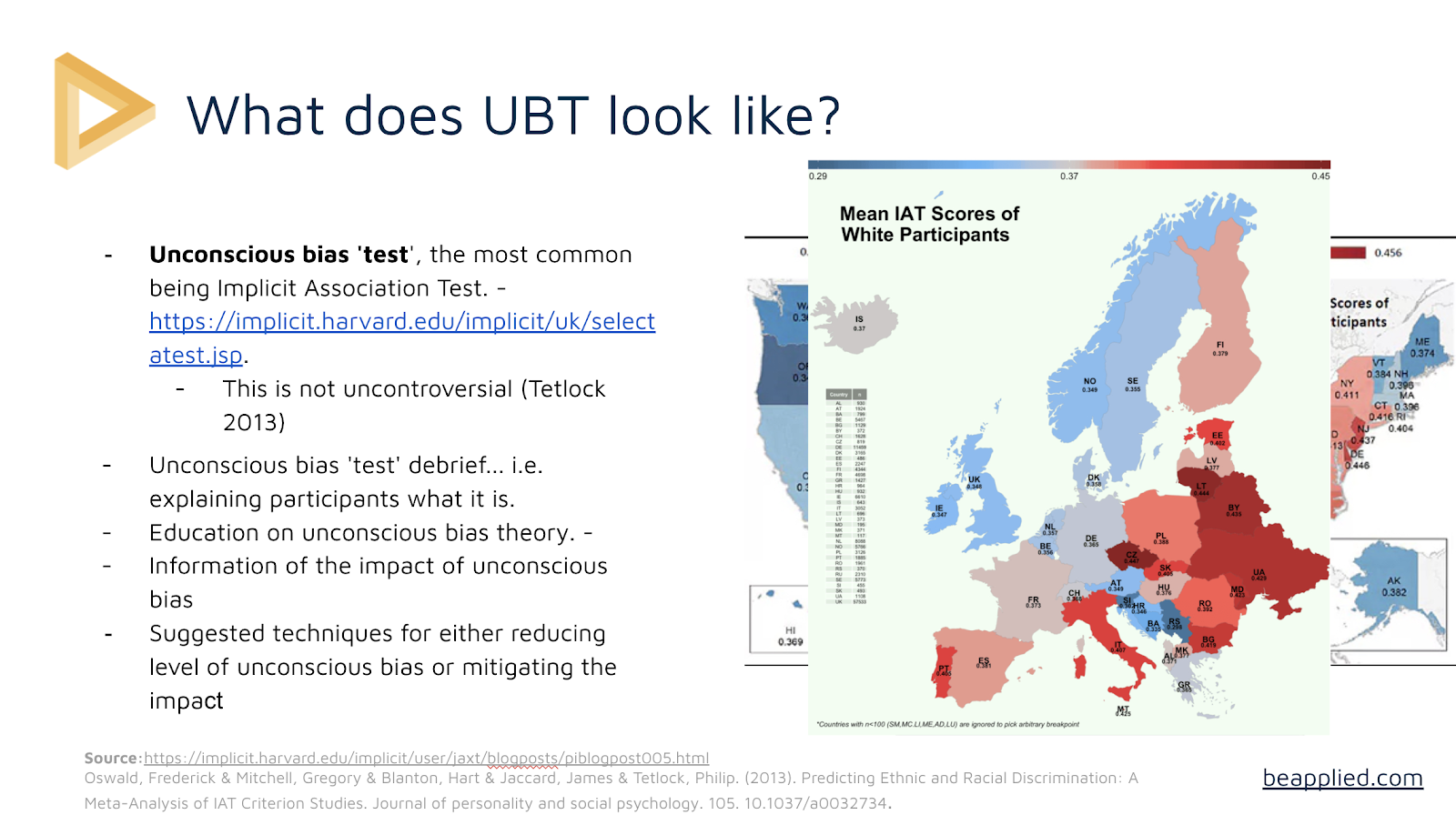

Since you have probably done this before, I won’t dwell on this too much, but the standard technique is to start with an unconscious bias test.

This is a test which is going to tell you how much implicit bias you have and how many of these stereotypes or hidden assumptions about people are in your psyche.

The most famous one is the Harvard Unconscious Bias Test. It has you look at lots of white faces, for example, and black faces. You have to match them with various concepts - positive or negative, or various stereotypes. Depending on how quickly you can match different faces with different concepts, it reveals your implicit bias.

Now, this is not uncontroversial. I'm not going to dig into the controversy around implicit bias tests, because it's a deep academic controversy and I also think that there is a good case to be made that it definitely reveals something about bias in societies in general.

It's the basis of all this unconscious bias training. Typically what you get is a bunch of people who say they're not biased, who you get to do a test like this. It reveals that they are in fact biased and then the training goes from there.

So it has people slightly on the back foot, showing that they're biased from minute one.

If you look at where in the world people tend to score most poorly on the Implicit Association Test, you can see it is the places where there is clearly the most discrimination.

But before you feel too smug, if you're in a blue state - Norway’s 0.349 shows that there's a significant amount of discrimination as a pro-white feeling.

So after the unconscious bias test, the training will then suggest techniques for reducing or mitigating the impact.

And there you have it - this is what your average unconscious bias session looks like.

Now, often these days, there’s a lot of other things mixed in. There might be a lot of discussion around, for example, white privilege, or white fragility, and case studies of individual stories.

All this stuff can be great. But it's not unconscious bias training.

It's mixed in with unconscious bias training as part of an overall session - and there's nothing wrong with that.

But it's part of the critical theory and a whole different branch of academia, which is lumped in together.

Does unconscious bias training work?

This is a really key question.

Because if it does work, we should all do it.

Bias obviously has a huge impact on fairness in businesses and it’s believed that it's rife in our societies all over.

So we're going to evaluate it on its own terms - we're not going to evaluate it for what it doesn't do, we're going to evaluate it for what it claims to do and what it wants to do: raising awareness and teaching methods to alleviate unconscious bias or implicit bias.

I'm going to call on loads of studies and try to get a balance… then I'll draw some conclusions.

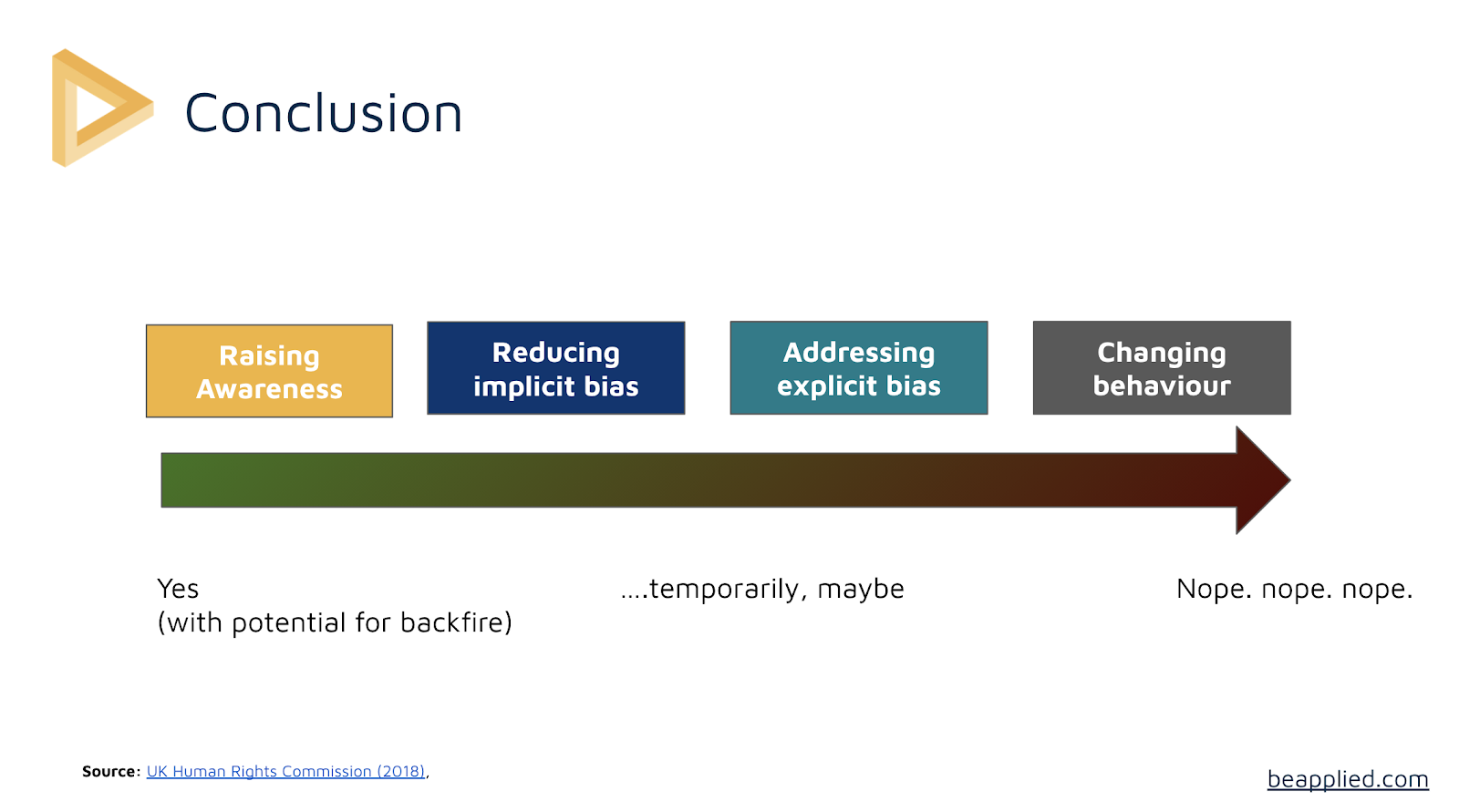

Let's break it down into the different things it might be able to do or claims it can do.

The first is raising awareness - just making people aware that they have biases and that discrimination is actually a problem in the workplace.

Then there's reducing implicit bias.

And there's also addressing explicit bias.

So implicit biases are these stereotypes and assumptions we're bringing to something without even realising we are doing it.

And if you've got that awkward uncle who hears some racist or sexist trope, and then under his breath mutters, "but it's kind of true, isn't it?" - that's an explicit bias, as opposed to implicit bias that a lot of us have, without even realising we're having it.

And the last one is changing behaviour. And changing behaviour is what we're really in it for.

Raising awareness

So, in terms of raising awareness, there’s actually quite good evidence that it does raise awareness and that people become more aware of bias.

Houseman and Capers showed, through self-reporting, that awareness of unconscious bias increases.

The Moss-Racusin study shows that training heightens ability to detect the gender diversity of an environment. So before the unconscious bias training, the group might not have even been aware that they were in a room which was nine men and one woman. But afterwards, at least they started to recognise that there were no women there, which is a good thing.

As for Carnes, this is also interesting. Training was shown to raise awareness and actually provide motivation to remedy the problem. I was actually surprised by these findings and that it goes beyond just raising awareness.

So I think we can say, with good evidence, that unconscious bias training can be good at raising awareness - and can even provide motivation to address issues. What we're going to cover later is that some of that awareness might not necessarily be good.

Reducing implicit bias

In terms of reducing implicit bias, the evidence is mixed - but it's not great, to be honest.

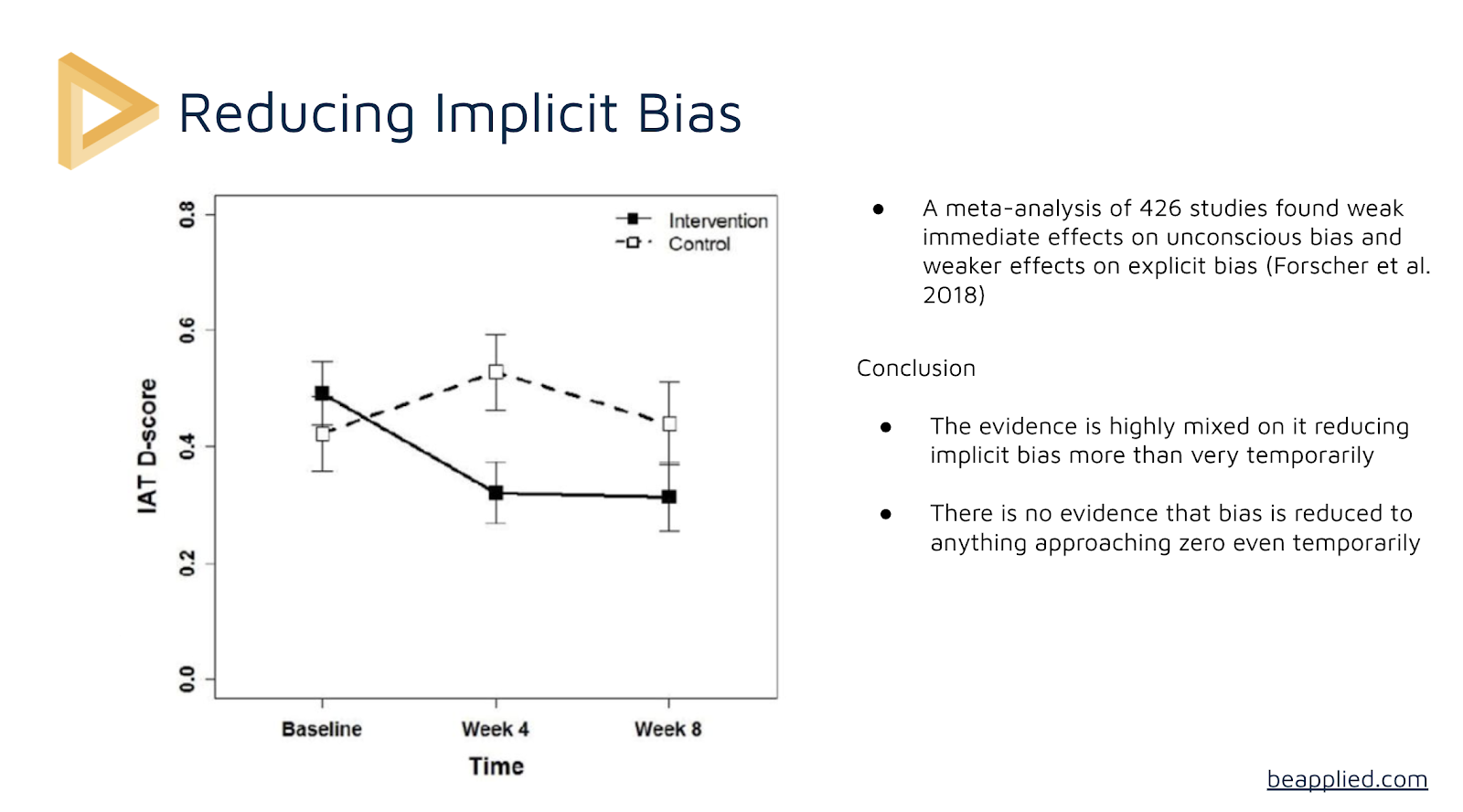

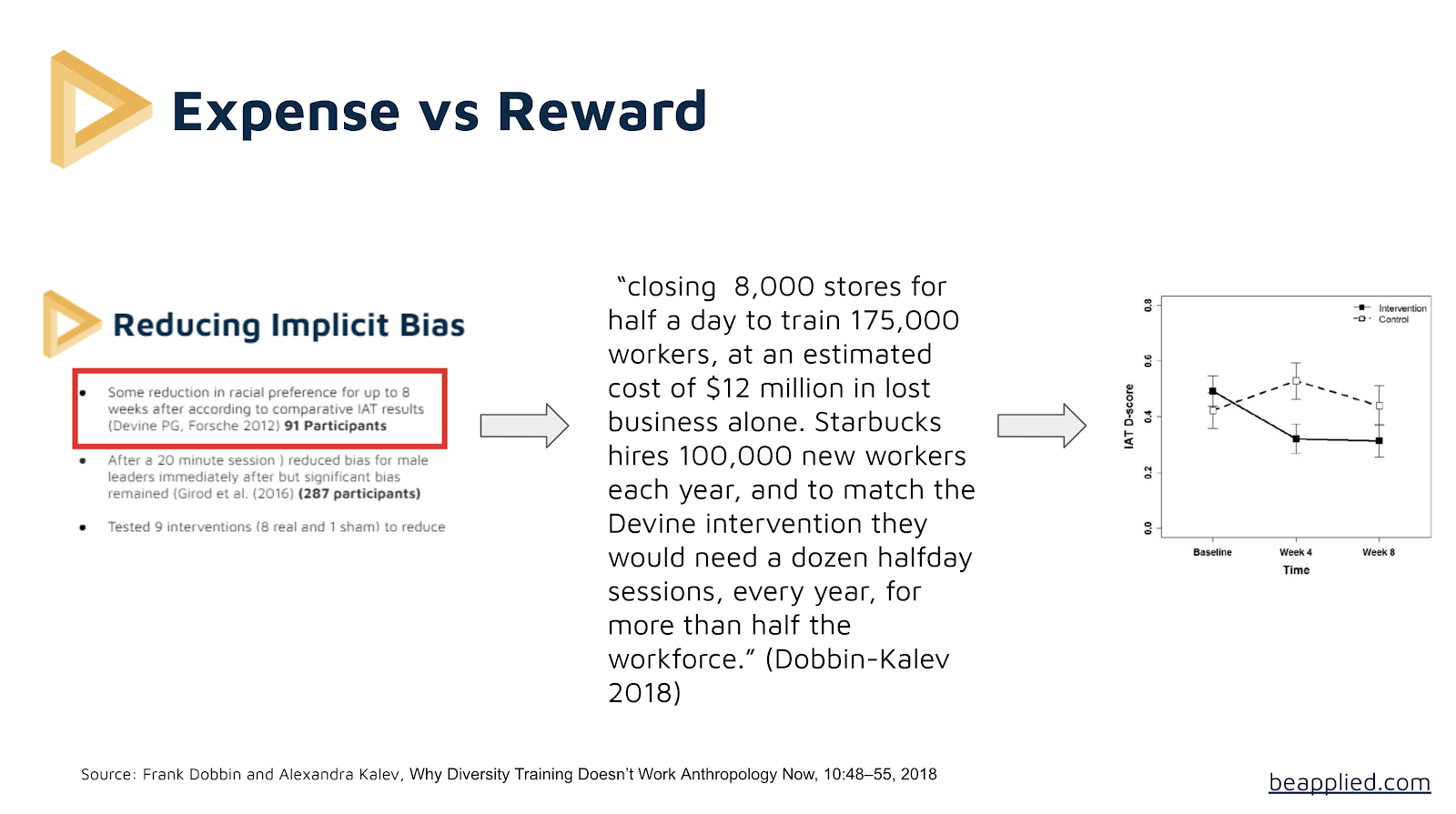

So the most positive study is this one: some reduction in racial preference after eight weeks, according to a comparative implicit bias test with 91 participants.

This study has been a big supporter of unconscious bias training and is often quoted.

But some of it is less positive. After a 20-minute session, bias was reduced in male leaders immediately. But significant bias remained. Out of 287 participants, none were unbiased after a few days.

So there was an immediate decision made afterwards with slightly less bias since they did better on the Implicit Association Test, but after a couple of days it had worn off.

And a meta analysis of 426 studies found that although there was some immediate effect on unconscious bias (albeit very weak), it disappeared over time.

So there's some evidence that you can reduce implicit bias for a period of time, maybe up to eight weeks, but there's absolutely no evidence that bias is reduced to zero, even temporarily.

On the chart above, you can see the reduction in Implicit Association scores for the intervention group (the group that had the training).

The black line is the one which had the intervention (compared to the training-free control group).

Granted, there is some reduction at eight weeks, although I'm not sure I would see this as a massive success, at least not by itself.

Reducing explicit bias

And so how about explicit bias? These are the people who actually do believe stereotypes consciously and actively.

In these cases unconscious bias training has no effect.

In fact, there are reports of increased animosity, anger and resistance.

People actually activate stereotypes that they hadn't even thought about for a while and become more racist and sexist - it gets their back up!

In one study, white subjects read a brochure critiquing prejudice towards black people.

When they felt pressured to agree, the reading strengthened their bias against black people.

So there is also quite a lot of strong evidence to suggest that if you make diversity training voluntary, it actually has a more positive effect than if you make it mandatory.

There’s a bunch of sources from a variety of studies in the image above, because some people are very sceptical about unconscious bias training - I’d suggest checking out the literature for yourself.

But it's clear that unconscious bias training is definitely not the way to tackle explicit bias.

Change in behaviour

We're going to work on the assumption that most people's bias is implicit rather than explicit (which some people would say is wildly optimistic).

So let's imagine for a second that it is mostly implicit - what is the change in behaviour we see?

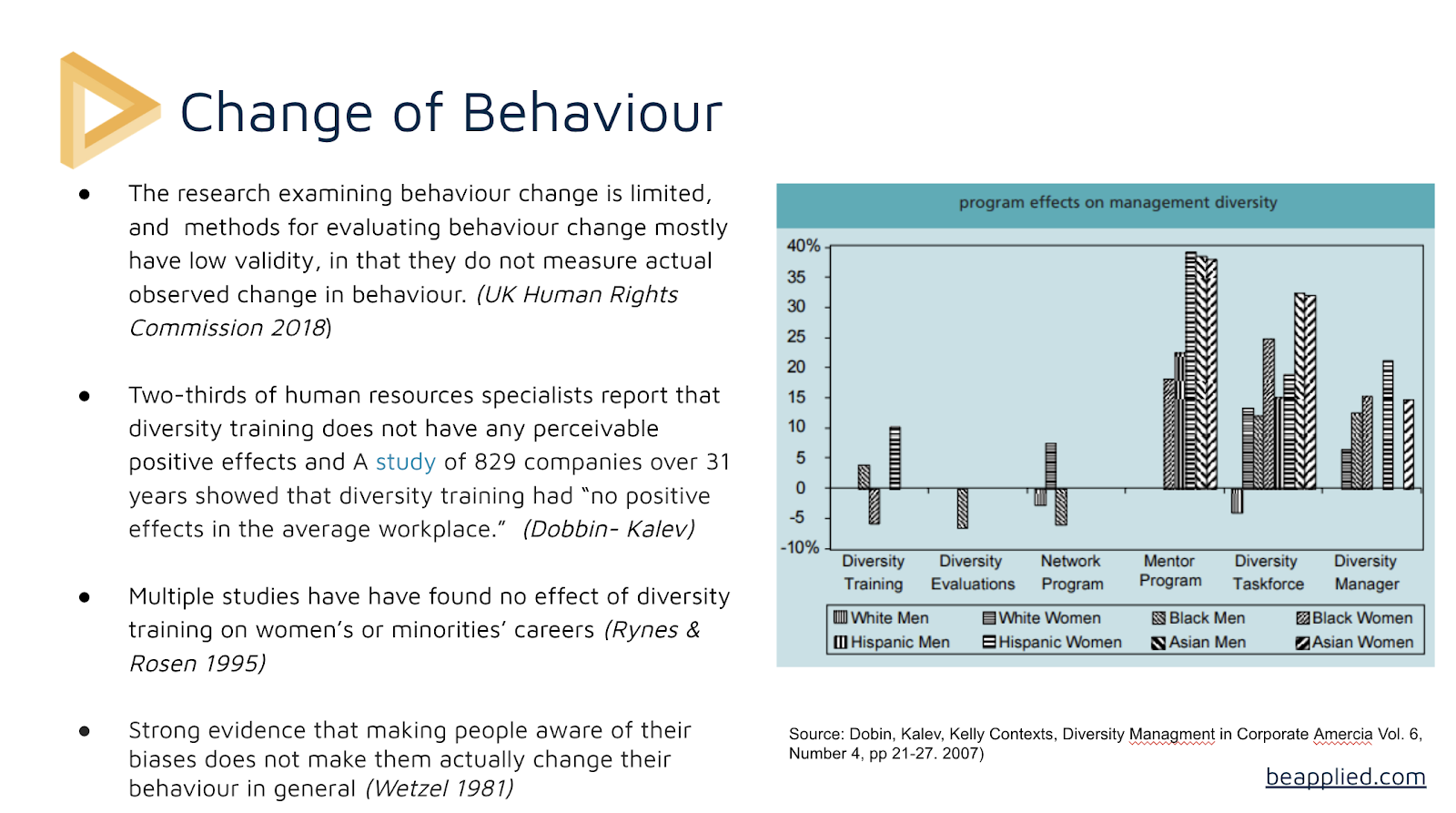

To be honest, it's really difficult to tell, because although unconscious bias training came out of the civil rights movement in the 60s/ 70s (not so much the movement itself but the corporate reaction to it), we don't actually measure observed behaviour enough.

A study of 829 companies over 31 years showed that diversity training had no positive effects in the average workplace.

And that's looking specifically at the progression of women, progression of minorities and the percentage of women and minorities in managerial positions - it’s not looking directly at if/ how behaviours changed as the result of the unconscious bias training because no one's been measuring it.

However, what has been shown is that despite having huge amounts of unconscious bias training, nothing substantial has happened.

There's strong evidence that making people aware of their biases in general - even when it has nothing to do with diversity - doesn't actually change their behaviour.

If we look at the biggest study on behaviour change (the blue chart above), you can see that the companies focused on diversity training actually saw less diversity - in this case, fewer black women in management positions.

In conclusion: there's not much evidence that unconscious bias training does work. And there's lots of evidence that nothing's really happening.

Unconscious bias training could even backfire

What’s interesting is that there’s actually been more research on the negative effects of unconscious bias training than on the positive effects - which I can't really seem to get my head around.

Bias training can backfire in lots of interesting ways.

When we're looking at explicit bias, we can see how people can get grumpy and resentful that they have to do things and therefore react against it. But there are actually more subtle effects as well.

Moral licensing is a really interesting concept.

People who've been given vitamins are more likely to smoke and skip exercise that evening - it's basically the concept whereby if you've done a good thing, you feel like you kind of earned your bad thing.

A study found that when given the opportunity to endorse Barack Obama (back in 2008), people were then more likely to discriminate against African Americans.

It also found that when subjects are told that their employers have pro-diversity measures, such as training, they presume that the workplace is free of bias and react harshly against claims of discrimination.

So if you've done your unconscious bias training, you might feel like you're then free of unconscious bias or at least less susceptible to it.

This means that you're then more willing to make decisions which are actually contrary to the training.

Although we can, in theory, accept that we’re biased (or that we could be biased), in reality, we always back ourselves and our decision making. On average, people rate themselves less biased than most other people.

Verdict: unconscious bias training doesn’t seem to work

So there's no rigorous evidence that unconscious bias training can lead to lasting, significant, or even a noticeable shift in actual behaviour.

It doesn't really seem to change implicit biases for more than a few days at a time, or eight weeks at the maximum.

There's also evidence that the training can be counterproductive to good outcomes so it's a bit dubious whether this is actually going to do any good.

But what about VR?

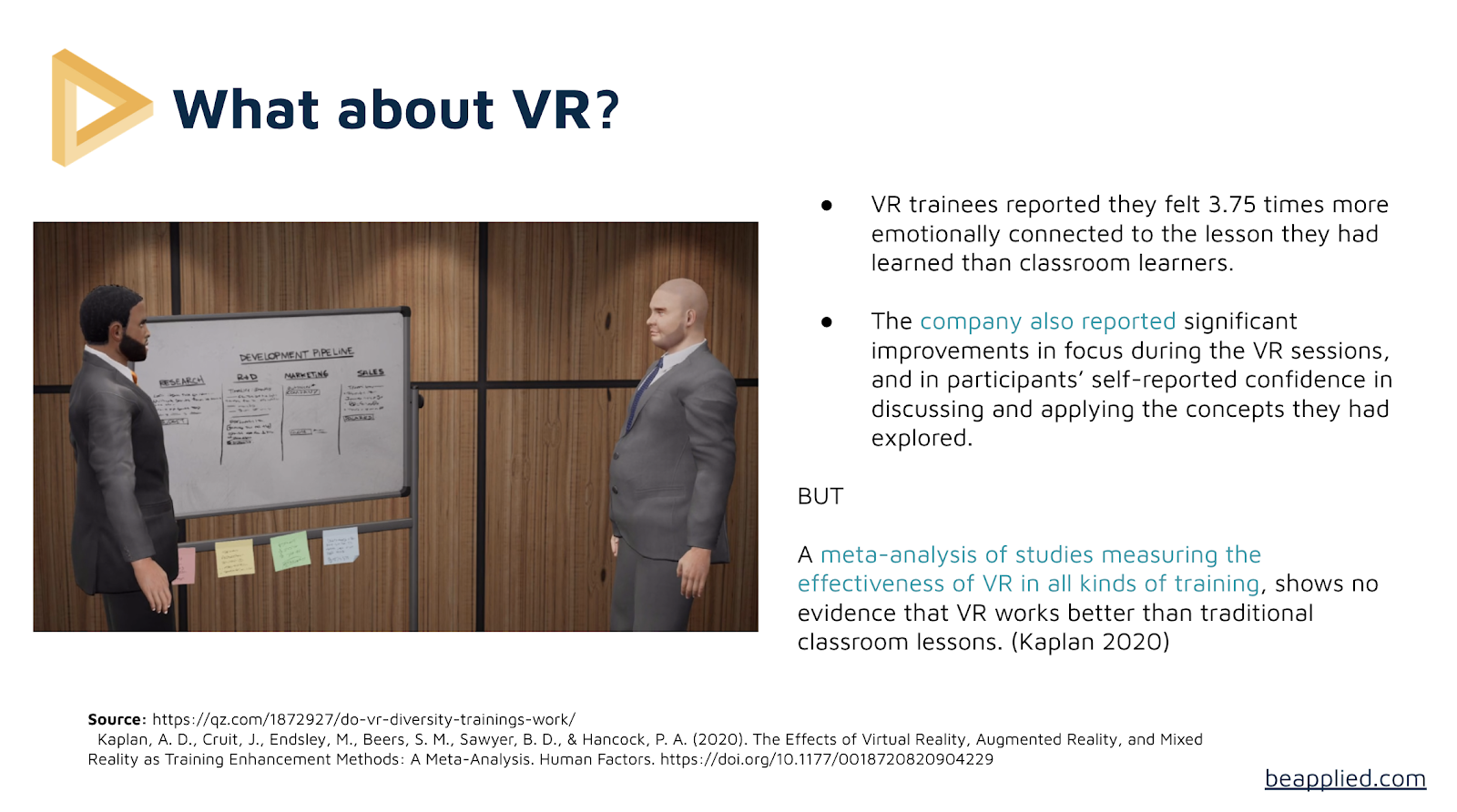

There are a lot of businesses currently spending money on diversity training with virtual reality.

I would wholeheartedly recommend everyone give this a go, and not because it necessarily works better, but because virtual reality is really good fun!

Lots of businesses have been doing VR training, the idea behind it being that if you're fully immersed in the environment - and you get to see the world through someone else's eyes - this might have an effect.

There are some good signs that VR-based training can do some good.

VR learners said they were 3.7 times more emotionally connected to the lesson than the classroom learners were, and significant improvements in focus were reported during the VR sessions.

Participants also self-reported increased competence in discussing these concepts, which is good.

On the other side, a huge analysis of the effectiveness of VR shows that while it's more effective at training people versus old school classroom solutions, in some ways, it may be less effective, because you can't answer questions like in the classroom.

To sum up Kaplan’s study referenced in the image above: just because it's fun and engaging, this doesn’t necessarily mean it works better. Having said that, there's no evidence that it doesn't work, and there’s good reason to believe that people feel more emotionally connected.

Most of the meta analysis was looking at things like putting an aircraft together or surgery, and so it's possible that something that relies on an emotional connection may be more effective through VR, but it's just too difficult to say at present - the study only came out in 2020... so for now, I’ll completely withhold judgement on that.

Putting it all together: should you be doing unconscious bias training?

So when we look at the benefits of unconscious bias training, we're looking at raising awareness, reducing implicit bias, addressing explicit bias and changing behaviour.

It's a spectrum, of sorts, in terms of how effective it is.

If we look at changing behaviour, it’s a firm no - there’s little to suggest that it will change anyone's behaviour, certainly not at an organisational, large-scale level.

This may seem like a very negative conclusion, but I don't actually think it is.

I think we just need to be much more realistic about what we expect unconscious bias training to achieve. If you’re thinking about using it as your primary strategy, you need to think about expense versus reward...

Dobbin - a critic of diversity training in general - pointed out that for Starbucks to actually get everyone trained, they’d need to close 1000 stores for half a day in order to train 175,000 workers (at an estimated cost of $12,000,000).

Starbucks hires 100,000 new workers each year, so they’d need to do a dozen half-day sessions every year.

Starbucks would be reducing implicit bias at a cost of $72 million a year. And now you can see how corporations manage to spend $8 billion a year on diversity training!

It's not that it can't be useful at all, it's just really expensive, time consuming and not really worth the money put in - and there are things you can do significantly cheaper, which will have a far bigger effect.

A question people often ask is ‘why doesn't it work?’

And I guess the answer is: why would it?

This isn't meant to be flippant or facetious, but if you look at the nature of behavioural science, these deep-seated biases that shape how you experience the world, and the mental shortcuts you take to function every day, can’t be undone by a bit of training - which is why no behavioural scientist has ever suggested that.

Whilst in some ways, it's really odd to think that training could ever have an impact, in another way, it's pretty natural to assume that it would. If I'm aware of my biases, they won’t affect me. That sounds reasonable enough.

Why do we do unconscious bias training when we know it doesn’t work?

Most of the people who conduct unconscious bias training correctly recognise that unconscious bias is a serious problem and then proceed to go about correcting it in the best way that they can. A lot of people do unconscious bias training in incredibly good faith.

However, there is also a case to be made that unconscious bias training is a means for organisations to cover their behinds.

The 2019 CIPD report states that diversity training is not only the most established way for employers to change behaviour and organisational climate, but also an important aspect of compliance that reduces employer liability.

This is fine - we all have to reduce our employer liability and do compliance, that's part of being a grownup business, but in the same paragraph, it also says this, which I find slightly aggravating:

It seems odd that the fact it doesn’t work is acknowledged, whilst also stating that it's a very important part of compliance - it's enough to make you a little bit cynical!

So I think the truth is: even though unconscious bias training doesn’t work, it’s still used for compliance reasons.

So is there a way of reducing bias?

If you look at how bias has been reduced successfully, it’s not by trying to change people.

It's by trying to change environments.

If de-biasing someone is in fact possible via teaching at all, it would take consistent and long-term effort over years and years.

What we do instead - or what most behavioural scientists try to do - is create an environment which makes the right choice easy through choice architecture.

You need to remove the possibility of biases getting in the way of decision-making.

So rather than telling people to eat their 5-a-day or to eat a balanced diet, for example, you’d give them the plate in the image above, which divides up the portions.

You're not trying to change what people want to eat or when they want to eat it, you’re just designing an environment that makes it easy to make the right choice.

Another example of environment-changing is instead of trying to get people to save for their pensions with simple messaging like adverts, you make it easy to save by making pension-paying the default option.

So instead of opting in, you’d have to opt out. As a result, people paying into pensions went from 49% to 86% here in the UK.

In terms of unconscious bias training, what we need to do instead is change environments, not people or how they think.

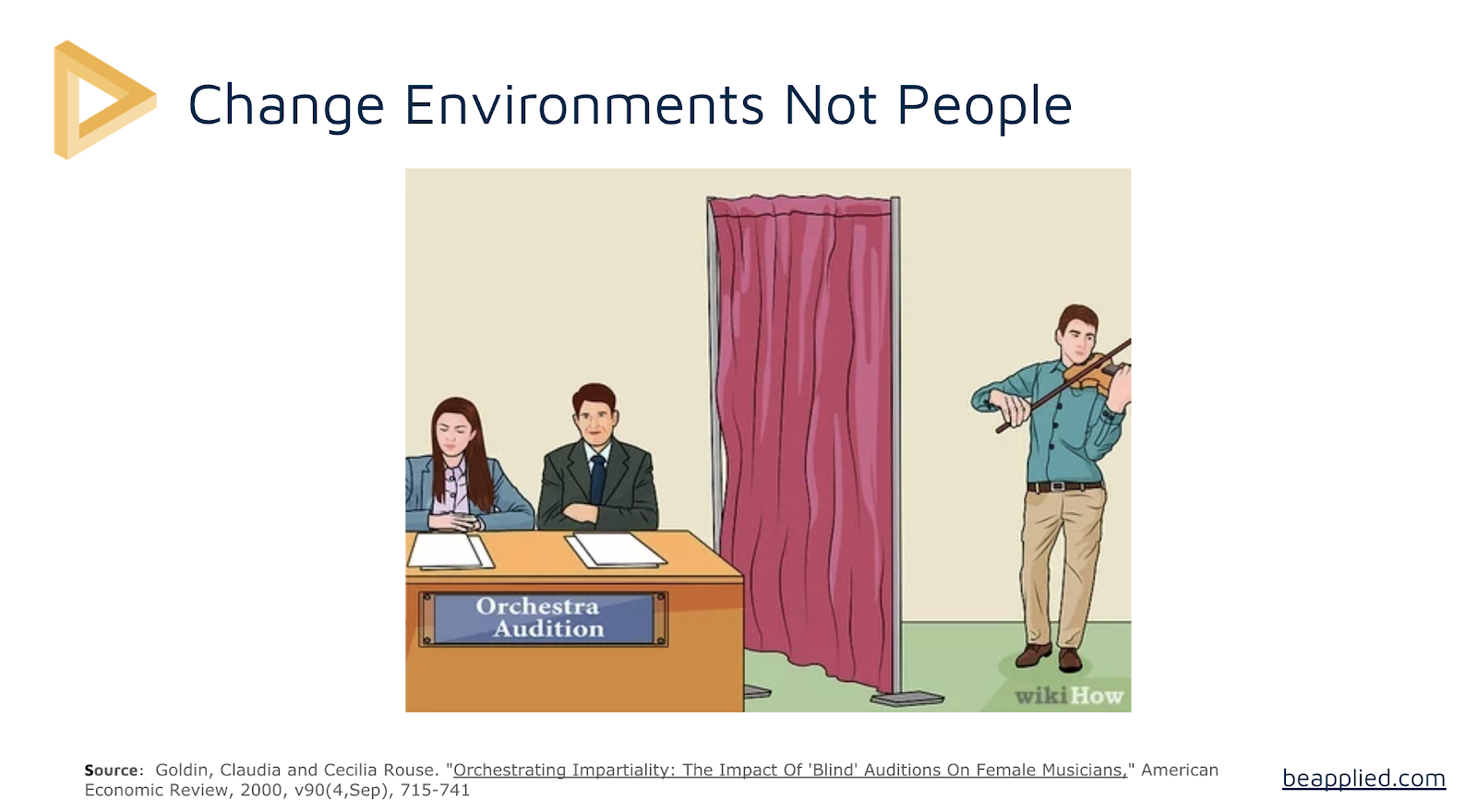

The most famous example of this in action involves an orchestra.

Back in the 70s, researchers managed to double the number of women getting through auditions by introducing blind auditions.

If you’d tried to explain to the conductors that biases prevented them from recognising good music when they heard it, I’m sure they would’ve thought you were being ridiculous.

And quite often when you're dealing with line managers, who in many ways will have the same level of verified expertise as a conductor of an orchestra, you'll find that they're resistant too - it’s a bit of a losing battle.

Instead of trying to change the way people think or behave, it's much better to rearrange the environment so that you remove the possibility of that bias occurring.

How we remove unconscious bias from hiring

Here at Applied, we've created a system and a process where everything is anonymised, and everything uses wisdom of the crowd.

So rather than individual decision-making based on what people look like, hirers don’t actually know what candidates look like or what their names are.

Everything is judged based on what they can do rather than arbitrary characteristics that might encourage unconscious bias.
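As a rough illustration of the idea (not Applied's actual implementation), the sketch below strips identifying fields from an application before reviewers see it, then averages several reviewers' independent scores. The field names, the `anonymise` and `crowd_score` helpers and the scoring scale are all hypothetical.

```python
from statistics import mean

# Hypothetical fields that could reveal who a candidate is.
IDENTIFYING_FIELDS = {"name", "photo_url", "date_of_birth", "university"}


def anonymise(application: dict) -> dict:
    """Return a copy of the application without identifying fields."""
    return {k: v for k, v in application.items() if k not in IDENTIFYING_FIELDS}


def crowd_score(scores: list[float]) -> float:
    """Average independent reviewer scores (a simple 'wisdom of the crowd')."""
    if not scores:
        raise ValueError("at least one reviewer score is required")
    return mean(scores)


application = {
    "name": "Alex Example",
    "photo_url": "https://example.com/alex.png",
    "university": "Example University",
    "work_sample_answer": "I would start by talking to the affected customers...",
}

blind = anonymise(application)
print(sorted(blind))           # only the work sample is left for reviewers
print(crowd_score([4, 3, 5]))  # three reviewers, each scoring independently
```

The point of the design is that reviewers never receive the fields that trigger stereotype or affinity bias, so there is nothing for them to consciously "switch off".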

This approach has been much more successful than unconscious bias training: 60% of the candidates hired through our platform would not have been found with CVs.

There also tends to be a huge increase in diversity when you start to de-bias the recruitment process through anonymisation and by using work samples.

You can get the full lowdown on how we’ve de-biased the hiring process here.

Humans are biased, and the only way to mitigate against this is to design systems that make it impossible. Applied was built to remove bias from the hiring process, so that the best person gets the job, regardless of their background. To find out how we’re re-thinking the hiring process from the ground up, browse our resources, or start a free trial of the Applied platform.