
Here at Applied, we’ve made it our mission to remove unconscious bias from the hiring process.

However, there’s a growing sentiment that labelling bias as ‘unconscious’ is just a way for organizations to absolve themselves of both blame and responsibility.

Those who make this argument believe that by calling bias ‘unconscious’, people are making excuses for what is actually just bigotry.

So, we’ve broken down the science behind unconscious bias and tackled this critique head-on...

Can bias ever be ‘unconscious’?

The short answer is yes.

All of the research around bias tells us that it can be (and very often is) something that we do without being aware of it.

We have a natural tendency to gravitate towards those most like ourselves and resist the unfamiliar.

Our brain makes thousands of micro-decisions every day.

To lighten this load, our brain’s decision-making uses two systems - one fast-thinking and one slow-thinking - a theory popularized by Daniel Kahneman’s ‘Thinking, Fast and Slow’.

System 1: used for everyday, intuitive decisions - like how much milk to put into your morning coffee or when to cross the road - essentially being on autopilot.

System 2: used for bigger, more important decisions like planning a one-off trip or working on a big presentation. System 2 thinking is generally slower and more mentally taxing.

In the face of these thousands of decisions, your brain uses shortcuts and patterns to draw conclusions - relying on subconsciously stored information like your past experiences or things you’ve seen in the news.

However, these shortcuts often lead to unconscious bias.

The ‘checker shadow illusion’ was developed in 1995 by Edward H. Adelson, Professor of Vision Science at MIT.

Take a look at the image below.

What if we told you that square A and B are actually the same colour?

Our brains use rough and ready rules to break down visual information into meaningful outlines of objects.

Most of the time this works well, but it can also mean we see what we expect to see, rather than what is actually in front of us.

This tendency to draw patterns and rapid-fire associations is often labelled as ‘gut instinct’.

Although this instinct is absolutely necessary for us to simplify and make sense of our complicated world, it also comes with negative side effects.

Once we start looking at decisions where people are involved - we can begin to see how unconscious bias can have more serious consequences.

When it comes to hiring, for example, we make snap judgments about people based on this ‘gut instinct’, which is really just unconscious bias.

Whilst this might explain some degree of outcome disparity, what about big diversity gaps?

As Bruce Kasanoff writes in this Forbes article:

“If you are ignoring talent in large portions of the human population, then the results of your biases will be obvious. I'm not talking about circumstances in which 51% of the local workforce is female and 47% of your senior managers are female; I'm talking about the fact that, say, 18% of your senior managers are female.”

The problem with this view is that unconscious bias ≠ not noticing you have a diversity problem.

You can be very aware of diversity issues (and even have the motivation to do something about them) but still have unconscious bias.

You can be aware and willing to do something about your majority white male management team (which would be a decision made by system 2)...

But then when it comes to sifting through applications and making individual hiring decisions, it’s easy to fall back into our shortcut-based system 1 thinking.

Of course, there will also always be people who are explicitly biased.

However, it’s safe to assume that the more senior a position is, the bigger a ‘risk’ that hire is, given that there’s generally more money and responsibility on the line.

And the higher the stakes are, the more risk-averse hirers are likely to be.

We know that people are naturally inclined to warm to those most like themselves and avoid the unfamiliar.

So, it is possible (although of course not excusable or acceptable) for leadership teams to be fairly homogeneous without necessarily being explicitly prejudiced.

This is most likely why we tend to see fewer women (as well as talent from minority backgrounds) the higher up the corporate ladder we climb.

Labelling bias ‘unconscious’ doesn’t mean we don’t have to address it

So, we know that unconscious bias is real.

But, as Diversity and Inclusion facilitator Bilal Khan says in this Metro article:

“We must be careful when we label things ‘unconscious’.”

He then goes on to say:

“From a personal perspective, if someone blames their unconscious bias for something, it makes me feel as though they are actually not recognizing their part in a wider system that is putting me at a disadvantage.”

This outlook is warranted…

However, it assumes that labelling bias as ‘unconscious’ means it’s inevitable and therefore can’t be fixed.

And this is simply not the case.

To effectively remove bias from decision-making and change real-world outcomes, we first have to understand the root cause of discrimination.

We can then design better systems and processes that reduce bias.

Yes, there may be some bad-faith actors who are explicitly biased - needless to say, these people should be removed from decision-making processes.

But pointing to individuals as the cause of widespread discrepancies in outcomes is as much of a cop-out as organizations blaming unconscious bias.

Rooting out a handful of bad apples isn’t going to have any measurable effect on outcomes.

The reality is: everyone is biased… but this doesn’t mean we don’t have a responsibility to address this bias.

Bias itself may be unconscious (at least for the most part) but organizations have to make a very conscious effort to remove this bias.

Changing outcomes by designing better processes

Much of the backlash against the concept of unconscious bias is due to the ineffectiveness of unconscious bias training.

This kind of training doesn’t work for one simple reason: it’s near-impossible to debias humans.

A study of 829 companies over 31 years showed that bias training had no positive effects in the average workplace.

As you can see from the chart below, the companies focused on diversity training actually saw less diversity in terms of black women in management positions.

So if training isn’t effective, how can we combat bias?

Well, if we look at case studies of how bias has been successfully mitigated, it’s not by training it out of people, it’s by changing environments.

You can’t re-wire the human brain, but you can re-design the environment in which we make decisions - a concept known as ‘choice architecture’.

In one of the most famous examples of this in action, researchers were able to double the number of women getting through orchestra auditions by making them ‘blind’.

By applying this same idea of designing bias-free environments, we can make a genuine impact on hiring outcomes too.

Rather than attempt to change the human nature of hirers themselves, we simply remove the information that would lead to biases being triggered.

This includes things like:

- Photos

- Names

- Addresses

- Dates of birth

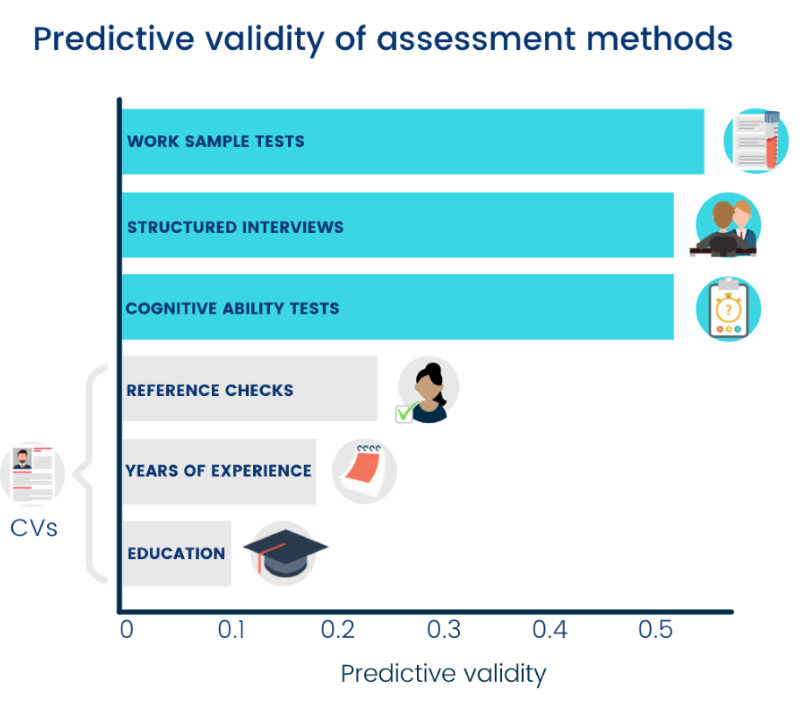
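As a rough illustration of the idea, here is a minimal Python sketch of stripping identifying details from an application before a reviewer sees it. It is not a description of how the Applied platform works: the field names, the `anonymize` helper and the sample record are all hypothetical and chosen only to mirror the list above.

```python
# Hypothetical field names mirroring the list above (photos, names,
# addresses, dates of birth). A real applicant record will look different.
IDENTIFYING_FIELDS = {"photo", "name", "address", "date_of_birth"}


def anonymize(application: dict) -> dict:
    """Return a copy of the application without the identifying fields."""
    return {
        field: value
        for field, value in application.items()
        if field not in IDENTIFYING_FIELDS
    }


# Made-up sample record, for illustration only.
application = {
    "name": "Jane Doe",
    "photo": "jane.png",
    "address": "1 Example Street",
    "date_of_birth": "1990-01-01",
    "answers": ["Response to a work sample question"],
}

# The reviewer only ever sees what is left after anonymization.
reviewer_view = anonymize(application)
print(reviewer_view)
```

The point of the sketch is that the reviewer never receives the identifying fields in the first place, so there is nothing for system 1 thinking to latch onto. The original record is left untouched so the details can be restored once a decision has been made.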

We also took this a step further by replacing CVs altogether in favour of skill-based assessments.

Why? Because not only do someone’s education and experience paint a picture of their background, but they’re also poor predictors of future job performance.

This doesn’t mean education and work experience have no value whatsoever… but we’d rather test for skills learned through experience than for experience itself.

Testing skills upfront and completely anonymizing backgrounds means that every candidate gets a fair and equal chance to showcase their skills, no matter how or where they acquired them.

We know this approach works - 60% of hires made through our process would’ve been missed via a traditional process, and these hires often come from minority backgrounds.

Even when intentions are pure, our brain’s natural inclination to take shortcuts means that the outcomes don’t always match our original intent.

So, it’s easier to create a process that reduces bias than to change how our brains work.

Bias isn’t a sinister boogeyman to be overcome and defeated.

It’s a reality of the human condition that we have an obligation to design around if we want to build a fairer world.

Final word

Whilst some organizations may use unconscious bias as an excuse or smokescreen for intentional discrimination, there’s no evidence to suggest that they are in the majority.

We can prove unconscious bias exists - but its unintentional nature doesn’t excuse us from having to tackle it.

The fact that organizations spend millions of dollars on training every year - without outcomes changing as a result - is a testament to the impact of unconscious bias.

There’s no reason to believe these organizations have anything other than good intentions.

But awareness and training alone aren’t enough to make genuine change.

In an ideal world, all hiring decisions would be made slowly and carefully using system 2, but in reality this is simply too much to ask of hirers, since our system 2 decision-making only has limited capacity.

Since we have fairly little control over which system we actually use at any given time, the most tried-and-tested means of reducing bias is to design environments that make it impossible - or at least much harder - to be biased.

Rather than entrench ourselves in the debate around how conscious or unconscious decisions are (something that psychologists and philosophers have grappled with for centuries), we have the behavioural science know-how to design processes that mitigate both kinds of bias.

Applied is the essential platform for debiased hiring. Purpose-built to make hiring empirical and ethical, our platform uses anonymized applications and skill-based assessments to identify talent that would otherwise have been overlooked.

Push back against conventional hiring wisdom with a smarter solution: book in a demo of the Applied recruitment platform.
