How can you prove your organization's hiring is fair and ethical?

Below, we'll show how to achieve a truly inclusive and data-driven recruitment process at your non-profit, without spending a penny.

We've found that 60% of those hired using the practices below would've been missed via a traditional recruitment process - mostly from ethnic minority backgrounds.

Psst. Are you hiring for your non-profit? Find out how we can help you build an ethical, mission-driven hiring process here.

What is ethical hiring?

Ethical hiring - at its core - means assessing candidates without discrimination.

It doesn't matter how good your intentions are - we are all subject to unconscious biases that affect our judgment.

This is why even in the third sector, organizations struggle with diversity.

So, we have to implement ethical hiring practices that mitigate this bias and ensure every candidate gets a fair chance.

The effects of bias are very much real and measurable.

Candidates from minority backgrounds tend to be disproportionately disadvantaged...

And so systemic inequality is perpetuated and organizations miss out on talent.

Why are ethical recruitment practices necessary? What about training?

Since much of our bias is unconscious, pure intentions alone won't change outcomes.

Bias isn't something that we can rid ourselves of completely - it's part of the human experience.

This is why all of the research around unconscious bias training points to the conclusion that it's pretty ineffective at changing behaviour.

A study of 829 companies over 31 years showed that bias training had no positive effects in the average workplace.

Although you can't train bias out of a person, you can make changes to the process itself that will reduce this bias.

How does bias occur?

Every day, we make thousands of micro-decisions... and we simply don't have the mental bandwidth to dedicate the same amount of time and consideration to every single one of them.

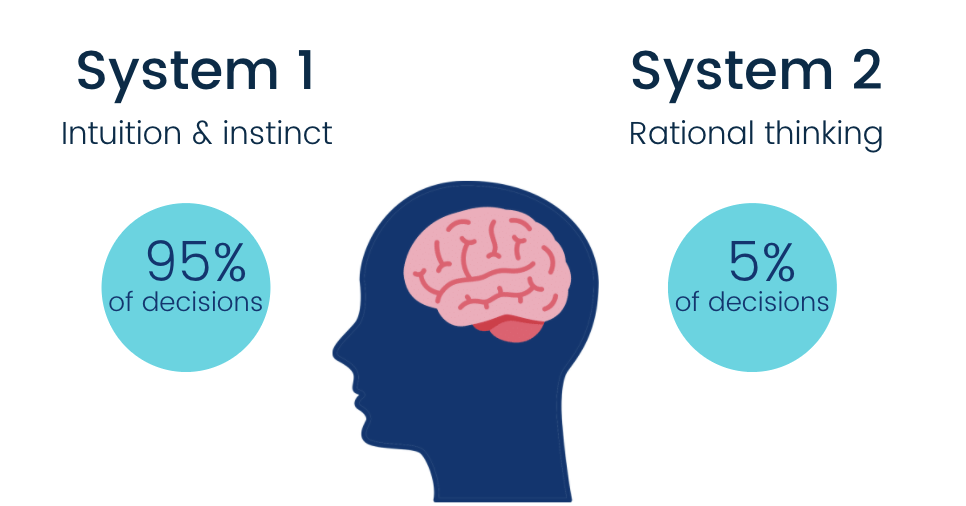

To lighten this load, our brain uses two systems for decision making.

System 1: this is like being on autopilot, using mental shortcuts to make everyday decisions like our route to work. Our brain falls back on past experience to guide our journey without having to think too hard.

System 2: this is for one-off, more important decisions, like planning a project at work. This system of thinking tends to be more conscious and requires more effort.

When tired, stressed or faced with too many decisions, we have a tendency to fall back on System 1, when we probably should be using System 2.

And since System 1 relies heavily on vague associations and shortcuts, it can lead to bias.

This doesn't mean that System 1 is inherently sinister. It helps us make sense of and simplify a world brimming with stimuli.

However, if we look at hiring, for example, instinct-based decision making is often both inaccurate and unfair.

If you review a stack of CVs, you're likely to default to System 1 - which draws on information subconsciously stored in your mental locker, sourced from your past experiences, what you've seen in the media, and stereotypes.

1. Start by anonymizing applications

The more we know about a candidate, the more grounds for bias there are.

We may unconsciously lean towards someone who we believe is similar to ourselves (or away from someone who is not).

This is why your name can have a significant impact on your chances of getting a callback - no matter where you are in the western world.

What information should you anonymize?

When we look at a CV, it tells us more about a person's identity than it does their skills.

Here at Applied, we've done away with CVs completely (which we'll get to shortly), but if you're not ready to take that step, we'd recommend starting with any information that can be used to build a picture of a candidate's background.

This includes:

- Name

- Photo

- Address

- Date of birth

- Hobbies

Removing this identifying information is an essential first step towards an ethical recruitment process.
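
As a rough illustration, the anonymization step above could be sketched in Python as follows. The field names, the `anonymize` function and the sample application are all hypothetical, made up for this example; they are not taken from any particular applicant tracking system, so adapt them to whatever your own application form collects.

```python
# Hypothetical field names; adapt them to your own application form.
IDENTIFYING_FIELDS = {"name", "photo", "address", "date_of_birth", "hobbies"}


def anonymize(application: dict, candidate_id: str) -> dict:
    """Return a copy of the application with identifying fields removed.

    The candidate is given an opaque ID so reviewers can still tell
    applications apart without seeing who submitted them.
    """
    redacted = {
        field: value
        for field, value in application.items()
        if field not in IDENTIFYING_FIELDS
    }
    redacted["candidate_id"] = candidate_id
    return redacted


# Made-up sample application, for illustration only.
application = {
    "name": "Alex Example",
    "photo": "alex.jpg",
    "address": "1 Sample Street",
    "date_of_birth": "1990-01-01",
    "hobbies": "rowing",
    "answers": ["Answer to question 1", "Answer to question 2"],
}

anonymous = anonymize(application, candidate_id="candidate-001")
print(anonymous)
```

Reviewers would then only ever see the anonymized copy, while the original stays with whoever administers the process.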

Whilst unconscious bias can creep into every step of the hiring funnel, this will ensure that every candidate at least has their application considered equally, regardless of background.

Why is anonymization ethical? Someone's ethnicity, gender and age can all affect how we perceive them, so it's best to simply remove this information altogether.

2. Consider swapping CVs for skill-based assessments

To achieve truly ethical hiring, we have to go a step further than anonymization.

Imagine you have a candidate’s CV in front of you…

You’ve now covered any identifying information, so what’s left?

Education and work experience.

The problem here is that if we simply assume that the best candidates come from the most prestigious universities and have experience at big-name companies, then candidates from underprivileged backgrounds are always going to be overlooked.

We know that biases tend to lead to these people being missed in traditional hiring processes.

So how can we expect them to have the background we usually look for?

You can read our Full Guide to Third Sector Recruitment here.

Using work sample questions

Instead of CVs, we use 'work samples' to screen candidates...

Work samples are interview-style questions designed to test the specific skills required for the job - and are one of the most predictive assessments we have at our disposal (which means that they can accurately predict how someone will perform in the role).

They take a realistic task or scenario that candidates would encounter in the role and ask them to either perform the task or explain how they would go about doing so.

The idea is to simulate the role as closely as possible by having candidates perform small parts of it.

Work samples are similar to your typical ‘situational question’ except they pose scenarios hypothetically - focussing on potential over experience.

Below is an example for a Fundraising role:

Rather than ask candidates to tell you about their experience and how it translates into skills, work samples put these skills to the test by getting candidates to think as if they’re already in the role.

Testing for skills using work samples means candidates can actually demonstrate their skills, rather than have their applications accepted or rejected based on assumptions made via their background.

Why are work samples ethical? They offer every candidate the chance to showcase their skills, regardless of where or how they acquired them.

3. Data-proof your hiring with scoring criteria

Where most hiring processes go wrong is not having any means of quantifying who the best person is. Instead, the person who hirers ‘like’ the most is hired.

To data-proof your hiring, you’ll first need to give each screening and interview question a review guide/scoring criteria.

Not only do scoring criteria give you a way of objectively ranking candidates, they also provide you with the data to prove you're hiring in the most ethical way possible.

At Applied, we use a 1-5 star scale to score each question.

This scale is accompanied by a few bullet points, detailing what a good, bad and mediocre answer would include.

Whilst most ethical hiring practices you’ll find online will tell you how to avoid explicit discrimination, data-proofing your process will enable you to get the best person for the job.

You can use candidates’ scores to provide useful, objective feedback and show candidates that they were assessed fairly against the skills needed for the job.

Hiring decisions won’t be made on the candidates you ‘felt’ were the best...

You can simply offer the job to the highest scorer.
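
To make this concrete, here is a minimal Python sketch of scoring answers on a 1-5 star scale and ranking candidates by total score. It is an illustration under stated assumptions, not Applied's actual implementation: the function names, candidate IDs and scores are invented, and summing the stars per question is just one simple way to combine them.

```python
from typing import Dict, List, Tuple

MIN_STARS, MAX_STARS = 1, 5  # the 1-5 star scale described above


def total_score(stars_per_question: List[int]) -> int:
    """Sum a candidate's star ratings, rejecting anything off the scale."""
    for stars in stars_per_question:
        if not MIN_STARS <= stars <= MAX_STARS:
            raise ValueError(
                f"score {stars} is outside the {MIN_STARS}-{MAX_STARS} star scale"
            )
    return sum(stars_per_question)


def rank_candidates(scores: Dict[str, List[int]]) -> List[Tuple[str, int]]:
    """Return (candidate_id, total) pairs, highest total first."""
    totals = {cid: total_score(stars) for cid, stars in scores.items()}
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)


# Made-up scores: one star rating per question for each anonymized candidate.
scores = {
    "candidate-001": [4, 3, 5],
    "candidate-002": [5, 4, 4],
    "candidate-003": [2, 3, 3],
}

ranking = rank_candidates(scores)
for candidate_id, total in ranking:
    print(candidate_id, total)
```

The sketch leaves open how to break ties between candidates with equal totals; that is a decision worth making before reviewing starts rather than after.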

Why is scoring criteria ethical? It ensures decisions are made based on criteria, not bias.

4. Structure your interviews

A structured interview is where all candidates are asked the same questions in the same order.

The idea is to make interviews standardized so that candidates are being compared fairly and against specific criteria.

Since questions are pre-set, structured interviews ensure that all questions are relevant to the job, keeping background out of the decision-making process as much as possible.

The questions you choose to ask also matter.

The most ethical way to approach interview questions is to ask forward-looking questions.

This means asking questions hypothetically, rather than questioning candidates about their experience.

If there’s a skill you’d like to test, simply think of a scenario that would test this skill (just like you would for the work sample screening questions) and ask candidates how they’d tackle it.

Posing questions in this way shifts the focus from background to potential.

Whilst previous experience and education may well make for the best answers, forward-looking questions make no assumptions so that every candidate is given a fair shot.

You can also use what we call ‘case study tasks’.

Case studies present candidates with a larger task to work through. You lay out all of the context and show candidates any relevant material (charts, user personas, briefs etc) and then ask a series of follow-up questions to get an idea of how they’d think and work through the task if they were to get the job.

Below is a case study we used for a Digital Marketer role:

Question: Below is some fake data to discuss. To meet our commercial targets we think we need to increase our conversions from 90/month to 150/month. Below are some fake funnel metrics and website GA data. With a view to meeting this objective, talk through the above data and what it might mean.

Follow-up 1: What additional data would you need to work out how to meet the objective?

Follow-up 2: Given the objective, where would you concentrate your marketing efforts? Is there anything that you would do immediately? Where is the worst place to spend your time, given what you see in the data?

Our memories are far from perfect - so take notes!

Just like your screening questions, each interview question should have its own scoring criteria.

It’s recommended that interviewers take notes so that they can score candidates immediately following the interview or even score answers in the interview itself.

Why? Because our memory is easily warped.

For example, we tend to remember the most intense moments and the final moments of an experience more vividly than the rest.

These snapshots dominate the actual value of an experience - a bias known as the ‘peak-end effect’.

One study put this to the test - asking participants to take part in two trials which involved putting their hand into uncomfortably cold water.

Trial 1: Place hand in 14°C water for 60 seconds.

Trial 2: Place hand in 14°C water for 60 seconds and then 15°C water for 30 seconds.

Participants reported that despite being uncomfortable for longer, they found Trial 2 less painful since the end of the experience was less painful.

When it comes to hiring, candidates whose interviews had high points or ended well may be unfairly favoured, which is why scoring interviews as soon as possible afterwards (or ideally during) is advised.

Below is an example of how a question is scored at Applied.

Why are structured interviews ethical? Making interviews uniform means like is compared to like, and irrelevant, bias-prone tangents are minimized.

5. Use interviewer panels to negate bias

Even with structured interviews and forward-looking questions, there will still be bias at play.

A single interviewer will have their own biases which will likely affect their scoring (although this would still be much fairer than a traditional interview).

The most effective means of mitigating this bias is to use multiple reviewers.

If you use a new three-person panel for each round, any individual biases will be averaged out over the course of the process.

And the more diverse your panels, the fairer the scores will be.

This isn’t just a more ethical hiring practice; it’s also more empirical.

Collective judgement is generally more accurate than that of an individual - a phenomenon known as ‘crowd wisdom’.
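
The averaging idea can be sketched in a few lines of Python. This is a simplified illustration, assuming each of three reviewers gives the same answer a 1-5 star score; the `panel_score` function and the example scores are hypothetical.

```python
from statistics import mean
from typing import List


def panel_score(reviewer_scores: List[int]) -> float:
    """Average the star scores that each panel member gave one answer."""
    return mean(reviewer_scores)


# Made-up example: one reviewer scores the answer harshly (2 stars),
# while the other two panel members both give it 4 stars.
single_reviewer = panel_score([2])
three_person_panel = panel_score([2, 4, 4])

print(single_reviewer)     # the harsh score stands on its own
print(three_person_panel)  # the harsh score is diluted by the other two
```

With a single reviewer, the harsh score decides the outcome. With three reviewers, it is only one third of the average, so no individual's leaning dominates.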

Why are interview panels ethical? They reduce bias by averaging out scores.

Applied is the essential platform for fairer hiring. Purpose-built to make hiring ethical and predictive, our hiring platform uses anonymised applications and skills-based assessments to improve diversity and identify the best talent.

Start transforming your hiring now: book in a demo or browse our ready-to-use, science-backed talent assessments.
