
In this article:

- What is a structured interview?

- Structured Interviews: Advantages and Disadvantages

- Structured Interview Example Questions

- How to conduct structured interviews

- FAQs

FACT: Structured interviews are fairer and more predictive than unstructured interviews.

Forget about trick questions, informal chats and ‘recruiter instincts’. This is how to turn your interviews into a data-backed science.

What is a structured interview?

A structured interview is one in which all candidates are asked the same questions in the same order. Candidates answer a set of interview questions that the interviewer has prepared in advance. The idea is to make interviews standardised, so that apples can be compared to apples.

Difference between unstructured, semi-structured and structured interviews:

Structured Interview Advantages

Structured interviews are more predictive of ability

The first reason to make the switch to structured interviews is a pretty straightforward one: they’ve been proven to be more effective than unstructured interviews.

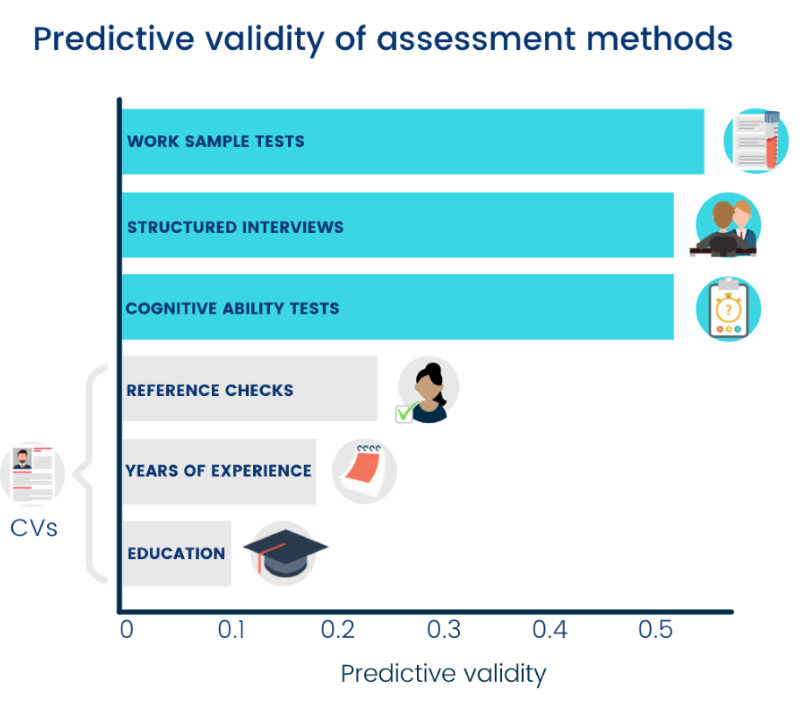

This chart shows the results of a famous meta-study on how predictive different assessment methods are.

As you can see, structured interviews were found to be significantly more effective than unstructured interviews.

Why is this?

Well, making interview techniques more uniform and using predetermined questions makes it easier to compare candidates objectively.

By knowing what you’re looking for before the interview starts, you’re able to identify who meets the criteria and who doesn’t.

It’s also fairly safe to assume that unstructured interviews involve a degree of irrelevant questioning regarding candidates’ education, experience and interests - which you’ll also notice ranking at the bottom of the chart above.

Structured interviews reduce bias

Structured interviews play a crucial role in mitigating bias within the interview process. A common misconception about bias is that it’s something perpetrated by a minority of ‘bad apples’.

Whilst some hirers may be more biased (or just more explicitly biased) than others, the truth is that we’re all biased.

It’s an unavoidable part of being human.

Here are just a handful of biases that could affect interviewing:

- Stereotype bias: when we believe certain traits are true of a given group of people.

- Confirmation bias: we naturally look for information that confirms what we already believe.

- Halo effect: a good first impression can heavily distort how we remember an experience.

- Contrast effect: we look to compare things rather than judge them on their merit.

You can read more about interview bias here.

The more structure you add to your interviewing process, the more bias you can remove.

If unstructured interviews delve into people’s backgrounds and are largely go-with-the-flow affairs, then it’s not hard to imagine how interviewers might take a shine to certain candidates based on their biases, rather than the candidate's suitability.

- Did the candidate go to the same university as you?

- Did they make a good first impression?

- Did you interview them at the start of the day, on a full stomach?

- Did you find them attractive?

All of these factors can and often do affect how we perceive candidates.

Whilst not all of these can be solved with a structured interview alone, we can take steps to design away much of this bias.

Structured interviews save time

A fixed set of questions with predetermined scoring criteria eliminates the need to create new questions for each candidate. They make interviews time-efficient as interviewers focus on answers that showcase a candidate's skills and are easier to compare objectively, accelerating the decision-making process.

Candidates prefer structured interviews

Knowing what to expect and having clear evaluation criteria and feedback can make structured interviews a more positive experience for jobseekers. We’ve seen this firsthand at Applied: over 800,000 candidates have been interviewed via the Applied method and rated the process positively.

Structured Interviews Disadvantages

While structured interviews offer several strengths, there are some disadvantages.

Interviewers need to put in more effort at the beginning stage of the hiring process.

It takes time to create skill-based interview questions. In the long run, however, interviewers save time by having a structured framework. Applied has a dedicated customer service team and a database of pre-written questions to help determine the best skills-based questions for your open role.

Structured interviews may not cover every aspect of a candidate's suitability for a role.

While it may seem like a good idea to delve deeper into a candidate's skill set, adding free-form questions during an interview creates space for our biases to creep in.

By sticking to the same interview questions across candidates, hiring teams can be assured they’ve selected the right candidate for the role based on skills rather than personal biases or favouritism, ultimately ensuring a more objective and fair evaluation process.

At Applied, we believe that the benefits of structured interviews far outweigh any weaknesses.

Structured Interview Example Questions

Here are the three types of questions we ask candidates here at Applied:

1. Work sample questions

An example work sample for a sales role:

Question: You haven't had a sale in x months but you know it's just been an unusual period and you think you're in a good place for the future. Your sales manager wants to help you look at your pipeline and activity levels. What do you do?

Explanation

Work samples aren’t too dissimilar from your typical ‘tell me about a time when…’ interview question. The key difference is that they’re posed hypothetically. You simply explain the context of the scenario and ask candidates how they’d respond.

This enables candidates who haven’t experienced a given scenario before to showcase their skills. It also acknowledges that some candidates may handle something differently today than they would have in the past. Work samples can be based on either dilemmas or everyday tasks.

The best work samples are those which are the most reflective of real life.

2. Case studies

Example case study questions for a Digital Marketer role:

Question: Below is some fake data to discuss. To meet our commercial targets we think we need to increase our demo requests from 90/month to 150/month. Below are some fake funnel metrics and website GA data. With a view to meeting this objective, talk through the above data and what it might mean.

Follow-up 1: What additional data would you need to work out how to meet the objective?

Follow-up 2: Given the objective, where would you concentrate your marketing efforts? Is there anything that you would do immediately? Where is the worst place to spend your time, given what you see in the data?

Explanation

Case study interview questions give candidates a bigger task to think through. This is an opportunity to present candidates with a real (or near enough real) project that they’d actually be working on.

After giving them the context, you can ask candidates a series of follow-up questions to see how well they understood the task and what their approach would entail.

Although you’re in a structured interview, you can still ask additional questions to help candidates explore their ideas - just make sure every candidate is afforded this help.

3. Job simulations

Examples of job simulation tasks we’ve used

- Client meeting

- Presentation

- Sales pitch

- Case study interview

Explanation

Job simulations take parts of the role and ask candidates to perform them.

Whilst not appropriate for every role, job simulations are well suited to client-facing roles that require skills that are hard to test via regular interview questions.

As you can probably tell, these types of questions are designed to test purely for skills.

Industry-specific experience is valuable, but these questions test for skills learned through experience rather than hiring people for their experience alone.

This means that talented candidates from outsider backgrounds get a fair chance to show off their skills, as well as minority background candidates who struggle to gain experience due to hiring bias.

How to conduct structured interviews

1. Decide on the skills you’re looking for

This step sounds simple but is critical. To make fair, data-driven hiring decisions, you’ll first need to define what you’re going to be testing for.

Usually, hirers might outline some vague character traits or experience requirements they’d like to see, but this isn’t going to cut it!

Instead, simply jot down 6-8 core skills needed for the role.

These can be a mix of hard and soft skills (eg SEO and strategic thinking).

This distinction is important because you want to be defining a skill set rather than a type of person.

In the latter case, candidates who don’t ‘fit the bill’ are likely to be unfairly overlooked.

If you match each interview question to one or two of the skills you’re looking for, you’ll be able to map out each candidate’s strengths and weaknesses throughout the process.
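As a rough illustration of that mapping, here is a minimal Python sketch. The question IDs, skill names and scores are all made up for the example, and averaging per skill is just one reasonable way to summarise the results, not a description of how Applied does it.

```python
from collections import defaultdict

# Hypothetical map of interview questions to the one or two skills each tests.
QUESTION_SKILLS = {
    "q1_work_sample": ["prioritisation", "communication"],
    "q2_case_study": ["data analysis", "strategic thinking"],
    "q3_simulation": ["communication"],
}


def skill_profile(scores):
    """Average a candidate's question scores (each out of 5) per skill."""
    per_skill = defaultdict(list)
    for question, score in scores.items():
        for skill in QUESTION_SKILLS[question]:
            per_skill[skill].append(score)
    return {skill: sum(vals) / len(vals) for skill, vals in per_skill.items()}


# Made-up scores for a single candidate.
profile = skill_profile({"q1_work_sample": 4, "q2_case_study": 3, "q3_simulation": 2})
print(profile)
```

Because communication is tested by two questions here, its figure is the average of both scores, which gives a steadier read on that skill than any single answer would.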

2. Ask questions that test skills, rather than background

A structured interview will only get you so far if you’re asking the wrong questions.

Traditional interview questions generally revolve around candidates’ past work experience and what they believe their strengths to be. These might be multiple choice questions, open- or closed-ended questions, and generally probing questions to assess a candidate’s skills and experience - but we have a few more specific examples we find work rather well.

The root issue with unstructured interviews is that they don’t test candidates’ skills, they just ask about them.

Skills should be the only thing that matters. Not where someone studied, where they’ve worked or what they do with their weekends.

Whilst these things could have an impact on a candidate’s skills, these alone can’t tell you who the best person for the job is - they’re just proxies for the skills you’re looking for.

3. Create scoring criteria

This is where data comes in!

To make data-based decisions, you first need to collect data. For each structured interview question, score candidates' answers out of 5.

To make sure you’re staying as objective as possible, we recommend writing a rough and ready review guide for each question.

This needn’t be any more in-depth than a few bullet points describing what a good, mediocre and bad answer would include.

Here’s the review guide we used for the sales interview question above:

Using a review guide enables you to overlook elements of a candidate’s personality or identity that you might otherwise be put off by, albeit subconsciously.

It also ensures that each answer is judged on its merit so that a good or bad answer early on doesn’t overshadow the rest of the interview.

You can still make small talk with candidates to put them at ease and answer any questions, but scoring in this fashion will ensure that this doesn’t impact a candidate’s chances of being hired.

At the end of each interview round, you’ll be able to either add up or average out candidates’ scores to build a leaderboard.
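To make the leaderboard idea concrete, here is a minimal Python sketch that averages each candidate's scores and ranks them. The candidate labels and scores are invented for illustration, and the function name is our own; summing instead of averaging would work just as well when every candidate answers the same number of questions.

```python
from statistics import mean


def build_leaderboard(scores_by_candidate):
    """Rank candidates by average question score (each out of 5), highest first."""
    averages = {name: mean(scores) for name, scores in scores_by_candidate.items()}
    return sorted(averages.items(), key=lambda item: item[1], reverse=True)


# Made-up scores: one entry per interview question, each out of 5.
leaderboard = build_leaderboard({
    "Candidate A": [4, 3, 5],
    "Candidate B": [3, 3, 4],
    "Candidate C": [5, 4, 4],
})

for name, average in leaderboard:
    print(f"{name}: {average:.2f}")
```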

You can grab our free Interview Scoring Worksheet here.

4. Try three-person interview panels

Crowd wisdom is the general rule of thumb that collective judgement is more accurate than that of an individual - two heads are better than one!

For the most accurate interview scores, we’d recommend having three interviewers (any more than that and you’ll see diminishing returns).

At Applied, we have a new panel for each interview round, so that any biases of a single interviewer are averaged out throughout the process.

The more diverse your panels, the more objective the scores will be.

If you’re scoring against criteria, you can get team members from other functions to help score candidates - we’ve found this diversity of perspective adds real value.
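Here is one way a panel's scores could be combined, sketched in Python. The interviewer labels and scores are hypothetical, and taking a simple mean per question is an assumption on our part for the sake of the example.

```python
from statistics import mean


def panel_scores(scores_by_interviewer):
    """Average each question's score across all interviewers on the panel."""
    # zip(*...) regroups the per-interviewer lists into per-question tuples.
    per_question = zip(*scores_by_interviewer.values())
    return [mean(question) for question in per_question]


# Made-up panel: three interviewers scoring the same three questions out of 5.
panel = {
    "interviewer_1": [4, 3, 5],
    "interviewer_2": [5, 3, 4],
    "interviewer_3": [3, 3, 3],
}

per_question = panel_scores(panel)
overall = mean(per_question)
print(per_question, round(overall, 2))
```

Averaging at the question level first keeps each answer judged on its own merit before anything is rolled up into an overall figure.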

Being interviewed by three people can be a daunting prospect.

Interviews are scary enough as it is.

So, the best practice would be to divide the interview questions among the interviewers. This way, there are no silent judges ominously staring at candidates.

FAQ Summary

What are structured interviews?

A structured interview is one in which all candidates are asked the same questions in the same order. They are used to de-bias interviewing and to support equality and diversity in the recruitment process.

Why use structured interviews?

Structured interviews remain a valuable tool for minimising bias, increasing reliability, and enhancing the overall quality of the hiring process. By employing standardised questions, they ensure a fair assessment of candidates' qualifications and skills.

How can Applied help me conduct structured interviews?

Applied's applicant tracking system provides a comprehensive solution to facilitate structured interviews. Easily create a set of predetermined questions for each candidate to test specific competencies and skills required for the role.

With Applied, all applicants are evaluated using the same questions in the same order.

What types of companies use structured interviews?

Structured interviews are employed by a wide range of companies across various industries. Perhaps the most well-known company that uses structured interviews is Google. Typically, companies that prioritise fair and consistent hiring processes, seek to reduce bias, and value objective evaluations are more likely to adopt structured interviews.

We built Applied to assess candidates purely on their ability to do the job and remove unconscious bias from the hiring process to find the best person for the job, every time.

Our blind hiring platform uses behavioural science to make the process as predictive and fair as possible, using research-backed assessment methods to source, screen and interview. To find out how all this is done, feel free to request a demo or browse our ready-to-use interview questions and talent assessments.
